The coming revolution in information science

Table of contents:

Chapter 1: What this Document is about

The Alternative to push advertising and poor user profiles

The Fire of Information Transparency

E-Markets, the technology and the first step

Scientific grounding and category technology

Commodity Transport and Informational Transparency

Ontological Modeling of Transaction spaces

Human knowledge transparency and privacy

Appreciation, Influence, Control

Chapter 1: What this Document is about

This document is about a new approach to information science.

The first question may be why a new approach is required. We will treat this question briefly, since the answer rests on a criticism of the current approach.

Information is defined here as recognizable form that is passed between receptive parts of physical reality. Yet in computer systems, information has a hard, rigid nature that is often defined by professionals in an extremely narrow fashion. In fact, we assert that the Information Technology (IT) profession has gained control over the evolution of computer technology, and that this evolution is now moving down a dead-end path. There are several reasons for this divergence.

This profession often exhibits agendas that serve only the profession, and thus the utility function is not defined purely and jointly by the nature of computing and the nature of human needs. The new information science is partially a consequence of a declaration of independence from this profession’s control.

The declaration of independence is only part of what is happening. There is also a deep revolution in the underlying technology, and in the social understanding of this technology.

This document has a scientific context, which is often concerned with the measurement of subjective evaluation. Such subjective measurement, and the models developed from it, are often neither precise nor representable in numerical form. In human systems, information is softer, more complex, and has qualities that cannot easily be captured using the current approach. One reason for this limitation is that natural information is often qualitatively different from computer-based information. Sometimes this difference is not important, but sometimes it means everything. For example, the recognition of novelty, as experienced in the world, is very difficult for information systems based solely on pre-existing data design.

Why would this document be of interest to most people? The answer is simple. The new approach has features that could transform current software and Internet processes. The transformation vastly simplifies computer theory and eliminates ownership of the infrastructure and of the core programmatic components. This approach is consistent with the rules of the current Internet and operates with a kind of stealth, while still being transformational.

A conceptual alternative is being introduced, based on a shift in how information flows on the Internet.

What is the key principle? Currently, most Internet information, email for example, is pushed; the main exception is information found with search engines like Google. The conceptual alternative is to enhance search capability through the use of formal models of information called computational ontologies.

The current IT industry has outsourced the development of ontology to highly paid salesmen. The alternative is enabled when any individual is given simple-to-use tools for producing models of the information that he or she is interested in.

The models, as will be explained below, go far beyond today’s unsatisfactory user profiles.

The Alternative to push advertising and poor user profiles

We have proposed the use of the .vir extension to create an Internet that has certain design principles, including a back plate. [1]

We conjecture that if this .vir alternative is allowed to compete with the current Internet, the transformation will come about through legitimate competition. Thus free markets are expected to be part of the solution.

The alternative is far more individual-centric, in ways that have to be seen, or explained in cognitive and behavioral science terms. The explanation will be hard for most to follow, but the systemic behavior of the alternative will be intuitive and easy to use. Structurally, the current Internet is controlled by push mechanisms and by responses to push mechanisms.

These mechanisms control global behavior, and global behavior shapes individual behavior. Pull is not enabled. For example, the individual is not given the tools to block spam and unwanted advertising.

The required tools have existed for over a decade and are designed into the primitive functions of the .vir alternative. These tools are easy to grasp and have transformational effects on what each individual can expect. The .vir tools will allow a great deal of control over one’s own information space. For example, one’s control over one’s information space is enhanced by placing a shield between oneself and advertising.

Paradoxically, the new safe Internet sub-system promises to protect digital property in a way that is unexpected and very powerful. Here both sides of transparency are exposed. For owned digital property, we wish to allow the owner to control usage via internal instrumentation of each digital object. This instrumentation establishes a strong foundation for commerce and for the design of .vir business processes based on commodity and product consumption. This tool, internal use instrumentation, gives the original owners of digital objects, such as digital video, the ability to record when and how the object is used. This transparency is provided to a micro-banking system via information exchanges restricted to ensuring only that the digital object has been properly licensed. So no private information is given up. The transparency is limited in a specific fashion by the technology and, as a matter of fact, by civil and criminal law.
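The instrumentation idea can be illustrated with a minimal Python sketch. This is not the EDO architecture itself, which is described only in confidential presentations. The class names `InstrumentedObject`, `MicroBank` and `LicenseQuery` are hypothetical, and a license is modeled as a plain token. The object keeps its own record of when and how it is used. The only message it sends to the micro-bank is an (object id, license token) pair, so no user identity crosses that boundary.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class LicenseQuery:
    """The only message an object sends to the micro-bank.

    Hypothetical format: it names the object and the license, and deliberately
    carries no information about who is using the object.
    """
    object_id: str
    license_token: str


class MicroBank:
    """Hypothetical micro-banking service that answers only 'is this licensed?'."""

    def __init__(self) -> None:
        self._valid: set[tuple[str, str]] = set()

    def issue_license(self, object_id: str, license_token: str) -> None:
        self._valid.add((object_id, license_token))

    def is_licensed(self, query: LicenseQuery) -> bool:
        return (query.object_id, query.license_token) in self._valid


@dataclass
class InstrumentedObject:
    """A digital object that records when and how it is used (hypothetical wrapper)."""
    object_id: str
    owner: str
    content: str
    # (UTC timestamp, action) pairs kept for the owner; no user identity is stored.
    usage_log: list[tuple[str, str]] = field(default_factory=list)

    def _record(self, action: str) -> None:
        self.usage_log.append((datetime.now(timezone.utc).isoformat(), action))

    def use(self, action: str, license_token: str, bank: MicroBank) -> Optional[str]:
        # The object asks the bank only whether this license covers this object.
        if not bank.is_licensed(LicenseQuery(self.object_id, license_token)):
            self._record(f"denied:{action}")
            return None
        self._record(action)
        return self.content


if __name__ == "__main__":
    bank = MicroBank()
    bank.issue_license("video-42", "tok-abc")
    video = InstrumentedObject("video-42", owner="studio", content="<frames>")
    print(video.use("play", "tok-abc", bank))         # <frames>
    print(video.use("play", "tok-bad", bank))         # None
    print([action for _, action in video.usage_log])  # ['play', 'denied:play']
```

Keeping the usage record on the object and sending only the license pair outward is one possible reading of the limited transparency described above. The owner sees use, the bank sees licensing, and neither sees the user.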

We are proposing an organized and safe Internet sub-system. This sub-system will exist alongside the existing Internet. No new rules are imposed on the existing Internet. The safe Internet sub-system will give individuals more control, will provide limited transparency over digital property use, and will provide an evolving map of markets. The concept is called differential transparency. For example, the safe sub-system will have a high degree of transparency over the types of informational objects that are moved around. Push advertising will exist only between ad producers and a repository of ads. Once in the service repository, the ads are never pushed; they are delivered only in direct response to a consumer request. Pull information services will be requested on behalf of individual consumers, using information structure produced by the consumer. The consumer is always in control of differential transparency.
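The push/pull separation described above can be sketched as follows. This is an illustrative Python sketch, not a .vir specification. `Ad`, `ConsumerModel` and `AdRepository` are hypothetical names. The consumer’s information structure is reduced to a table of topic weights plus a block list, standing in for the much richer ontological models the text describes.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class Ad:
    ad_id: str
    producer: str
    topics: frozenset[str]


@dataclass
class ConsumerModel:
    """Information structure produced by the consumer (hypothetical, far simpler than an ontology)."""
    interests: dict[str, float]                     # topic -> weight chosen by the consumer
    blocked: set[str] = field(default_factory=set)  # topics the consumer never wants to see


class AdRepository:
    """Producers push ads into the repository; the repository never pushes them out."""

    def __init__(self) -> None:
        self._ads: list[Ad] = []

    def submit(self, ad: Ad) -> None:
        # Push exists only between ad producers and the repository.
        self._ads.append(ad)

    def pull(self, model: ConsumerModel, limit: int = 3) -> list[Ad]:
        # Ads leave the repository only in response to a consumer request,
        # filtered and ranked by the consumer's own model.
        scored: list[tuple[float, str, Ad]] = []
        for ad in self._ads:
            if ad.topics & model.blocked:
                continue
            score = sum(model.interests.get(topic, 0.0) for topic in ad.topics)
            if score > 0:
                scored.append((score, ad.ad_id, ad))
        scored.sort(key=lambda item: (-item[0], item[1]))
        return [ad for _, _, ad in scored[:limit]]


if __name__ == "__main__":
    repo = AdRepository()
    repo.submit(Ad("a1", "gallery", frozenset({"pottery", "taos"})))
    repo.submit(Ad("a2", "dealer", frozenset({"cars"})))
    repo.submit(Ad("a3", "weaver", frozenset({"textiles", "taos"})))
    me = ConsumerModel(interests={"pottery": 2.0, "taos": 1.0}, blocked={"cars"})
    print([ad.ad_id for ad in repo.pull(me)])  # ['a1', 'a3']
```

The repository has no method that sends anything to a consumer unprompted. That absence is the point of the design: every delivery starts from a `pull` carrying the consumer’s own model.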

Individual control of information flow will also allow the archiving and management of television programming, in ways that place the management of ad content under the control of the individual. Content storage and management will allow any television content to be recorded and viewed at the individual’s convenience.

The Internet infrastructure will be used to create, within the sub-system, a small but definite change in the worldwide economic system. Such a system will challenge certain parts of the present economic and political power structure. However, such a system could also help adjust imbalances in the current economic and political systems. So the stakes are high, and value is seen by many categories of economic and political stakeholders.

Market acceptance

Very rapid worldwide acceptance of the new system is possible. It is merely a question of preponderance of economic force. The old information science, what we call the first school, is like the oil-based industries, and will do what it can to block the knowledge that there is a new school of thought. [2] Markets experience the preponderance of economic force at a tipping point, and this tipping point depends partly on informational transparency.

The foundational work takes certain specific technology innovations and combines them in such a way as to enable the rapid establishment of new principles governing worldwide commodity transport and information exchanges. The innovations are consistent with a specific economic philosophy that is being defined as a worldwide movement. The core technologies, like electric car batteries and other components, were fully developed in the 1990s but were marginalized by the database vendors and by the many consulting industries that depend on a constantly shifting foundation under the installed software base.

Capitalization

We realize that without proper capitalization, any serious attempt at changing market behavior will be swamped as soon as it starts to be successful.

One key to the capitalization of OntologyStream Inc and VirtualTaos is the Encapsulated Digital Object (EDO) architecture. The EDO architecture is discussed in confidential presentations directed at acquiring capital to build out the .vir subnet. These discussions can be shared among participating scholars whose innovations are being protected with patents.

A number of specific breakthroughs must be capitalized at the same time, because releasing this set of innovations without capitalization could doom the new science to an early grave. While we wait, we are looking for a simpler way to state our principles. The reader is asked to bear with us and to realize that the assertions, propositions and technology we are offering are developed from a deep and fresh view of the nature of human and computer information. What must be explained seems paradoxical, yet it is not paradoxical when viewed from the new perspective.

A mechanistic behavior will protect viewpoints that are strongly attached to the status quo. There is a lot of value that comes from this protection, so we hope the reader understands that we are merely pointing out something that seems to be factual. Understanding the depth of this transformation requires real dedication, particularly because there are, as yet, no clear demonstrations of how this new paradigm will work.

One has to see the complete picture, and many parts of this picture exist only in theory. However, we have noticed that when a defensive position is taken, it is often NOT because the person lacks knowledge. A defensive position is taken because the new concepts are strongly at odds with some, but not all, of the currently accepted viewpoints about advertising, economics or even politics. These viewpoints often have moral judgments associated with them. So the paradox is that a proposed decrease in the importance of advertising may be seen as anti-American, for example.

The work that the second school is presenting is founded on enumerated principles. These principles are grounded in natural science, including the natural science of psychological and social phenomena. There is an underlying foundation for rapid acceptance, based on each individual’s feeling about what might be possible if the current trends towards Internet-based commerce continue. We examine this carefully.

The core element to the coming revolution in information science is transparency.

Even this term, transparency, needs to be defined, particularly in a society where the concept of transparency has become complicated and burdened with ambiguity, and often with purposeful distortion. Is the current advertising paradigm providing quality information to consumers about what is available to purchase? Is this information transparent?

The Fire of Information Transparency

An email titled “The Fire of Information Transparency” was sent to me by one of the leaders of the topic map standards community:

The Web's disruptive power has burned away a lot of small-time stuff, dis-intermediating various institutions and people. (Tim Berners-Lee is a kind of Prometheus, because he and his friends figured out how to start that fire.) However, that fire hasn't reached the temperature required to dis-intermediate the walls of secrecy between the people and their government, and it isn't likely to. Tim's fire is already dying down, and Congress is currently quenching it vigorously, by killing Net Neutrality, etc.

What's needed is for transparency to become an everyday fact of life. The people will have to live with it and like it, before they will cast aside fundamental assumptions like the necessity of secrecy in public affairs, or the idea that government policy is always on sale to the highest bidder, or that any human knowledge can be private property. Revolutionary change will occur when the people become indignant that their government isn't operated under the same kinds of transparency that already rule their everyday lives.

So, I think that what you and I should be doing is focusing our efforts on changing the environment in which *all* human affairs are ongoing, by creating the *option* of transparency. I think the availability of such an option will make a high-temperature fire inevitable, like a long drought in a wooded area. A transparent economy will always outperform an opaque one.

In a transparent environment, the soviets, er, neocons, er, statists now in power in Washington will be unable to compete. Neither their money, their militarism, nor their brutality can save them from such a fire.

As people become more comfortable with transparency, it will penetrate everything. As it picks up momentum, it will achieve a very high temperature and be very disruptive. It will burn away a whole lot of inhumane, brutal, oppressive shit, and human productivity will soar. (It's going to *have* to soar if we're going to get the climate system, and everything else essential to our survival, under control.)

Yeah, but what should we do? Well, I think anything that increases transparency helps. And I suspect that what you're up to has a good chance of increasing transparency. I would encourage you to focus on tending that fire, and to try to avoid compromises and distractions that might tend to reduce its temperature. Concentrate on achieving a high enough temperature that, as in the Bessemer process used in blast furnaces, the dross stops being inert and becomes fuel.

This sentiment is shared by some of the best innovators in information science. Many innovators want to create a critical mass so that the work we do for pay is the innovation we talk about privately, rather than work on technology standards that we do not believe in.

E-Markets, the technology and the first step

The concept of an e-market for the artistic production of Taos and surrounding areas could well provide the needed stepping-stone for the establishment of the second school. The nature of artistic expression is reflected in a proposed distribution and marketing system. The proposed system has deep political support within the Town of Taos government, from artists and galleries, and from leading citizens of Taos. The business value of developing the Taos, New Mexico e-market in artistic products is very important to examine, in both the short term and the long term.

The second school’s technology shifts the focus away from products offered by large corporations. This shift from corporations to individual producers is a key element of the .vir technology. Quality production by an individual becomes visible to precisely those consumers who are interested and are able to purchase. This visibility is to be instrumented by the informational transparency we are talking about. The transparency is differential, controllable and rational. Differential visibility seems inevitable, and thus the .vir subnet will be seen as a continuation of systems like e-Bay and Amazon, but with a specific new underlying capability not possible in e-Bay and similar Internet systems.

OntologyStream Inc has an investment potential that is based on our ability to establish new standards. These standards are ready to be put in place. Our standards fit with existing and anticipated OASIS SOA [3] standards. Technical papers on our standards work are available. Because of the maturity of our work, the investment schedule is not typical: OntologyStream Inc and related efforts are now over a decade old and have considerable IP and political capital.

One can imagine a business ecosystem where commodities are passed from individual to individual within value chains supported by just-in-time inventory mechanisms, such as next-day delivery by UPS. OntologyStream Inc is properly branded and has significant isolated IP, so this business ecosystem could emerge in a matter of months. We start with the art markets because of my position within the Taos art community, and then we extend the .vir system using standards and enumerated principles.

Enhancement of markets

How can we enhance current systems and practices in order to more fully support this ecosystem? The answer is informational transparency. There is a limit on the degree of informational transparency unless information is actually creating the meaning of information, in context. Once transparency is obtained, there is a need to limit it and to develop legal protection based on judicial review, not on computer security programs. There is a need to instrument the protection of digital objects so that court actions are clearly differentiated. There is a need to severely dampen push advertising and spam. There is a need to declare independence from the programmers and the IT vendors.

These features of the .vir reality shift the utility function underlying purchasing decisions. If only the advertising agencies and software engineers are designing information structure, then the market evolves in a specific fashion. The evolution is towards supply-side markets: the markets sell what someone decides to produce, not necessarily what would be wanted if a more informed choice were available. Supply-side and demand-side markets will both co-exist and benefit from the .vir subnet. A shift in the origin of information design is the key to modeling commodity and consumer interests. If consumers design information about what they want, rather than ad agencies designing information about what they make money selling, then there is an overall reduction in cost and an increase in perceived value to the consumer. These models help reduce distribution costs by reducing uncertainty.

Informational transparency allows any person to see how systems, and systems of systems, are evolving in real time. In systems theory we describe the changes in a system as being partially due to the interaction rules and to how these rules are realized. The notion of a utility function is used. A full understanding of what a utility function is may take years to acquire. However, on the surface there is a common-sense understanding that any system’s utility function involves the push of system dynamics and the pull of some type of template or normal categorization process. This common-sense understanding is correct.

The results of an economic system’s utility function are seen in the behaviors of the system. Without being too judgmental, perhaps it is reasonable to say that if the utility function is driven by a demand for increasing consumption and by push advertising, then the system tends to be parasitic and blind to the damage that is done. The reason is simple: all value is considered to be locally realized, and thus global or distributed needs are left without economic support.

The language of the previous paragraphs suggests how the second school founders have combined very advanced category theory, developed from biomathematics and biological science, with a new type of ontological model formalism. [4] The difficulty has been in helping any single venture group grasp, from our results, how near this technology is. We have to demonstrate that the .vir subnet can actually exist as Internet infrastructure, but we cannot demonstrate results without actually having the system deployed and used. This next step can be achieved and sustained with investment. This investment would create the software supporting the Virtual Taos e-Market for artistic production from Taos, New Mexico.

Scientific grounding and category technology

A scientific grounding in how natural categories form and are modified is a core part of the second school. From natural science we observe objectively, and verifiably, that natural categories [5] tend to develop supporting systems. The mechanisms associated with a category create a system that has stability. A hot new item in the market, for example, has a self-sustaining nature.

How does one model the onset of a new category? How does one model its modification? What does one put into the front windows of the store? If an automated system has specific, validated information regarding the demand for commodities and for information, then the front window of an e-market can be arranged to respond to individual interests.

Due to specific technical errors, which we will discuss, the value of W3C standards-based ontological models [6] is considerably diminished. For a number of reasons, including judgments by the US Federal CIO Council, W3C’s Web Ontology Language (OWL) standards were selected rather than Topic Map standards. The new category theory works with Topic Maps. A large number of technical documents and PowerPoint presentations on these issues are available.

The behavioral claims of the second school are:

1) Proposed second school standards for knowledge representation (as computational ontology) contribute to the mechanisms that support information transparency

2) The human to computer interface for e-market activity can be separated from the rest of the Internet by requiring a .vir standard to be available to any properly defined computational service.

3) A systemic harvesting of localized ontology models is possible

4) The aggregation of localized information into categorical/ontological models can be taken through a process where personal identification is left behind

5) The aggregated information structure is then used to produce various models, including an evolutionary model of consumer interest in context

6) These models can be used to arrange e-market store fronts, and to develop a repository of information artifacts to be requested by web-services

7) The .vir subnet can be built incrementally starting with Virtual Taos
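Claims 3 through 5 can be illustrated with a small Python sketch of harvesting and anonymous aggregation. The shapes here are assumptions. A harvested model is reduced to an owner id plus a set of topic-to-topic associations, which is far simpler than a Topic Map. The `min_contributors` threshold is an added safeguard against re-identification; the text does not specify it. `LocalModel`, `aggregate` and `trend` are hypothetical names, and `trend` only hints at the "evolutionary model" of claim 5 by comparing successive harvests.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Tuple

Association = Tuple[str, str]  # (topic, related topic)


@dataclass(frozen=True)
class LocalModel:
    """A harvested localized ontology model (hypothetical, reduced to topic associations)."""
    owner_id: str                         # personal identification; used only inside aggregate()
    associations: frozenset[Association]


def aggregate(models: list[LocalModel], min_contributors: int = 2) -> dict[Association, int]:
    """Merge local models into one model of shared associations, without owner ids.

    Owner ids are used only to count distinct contributors per association and
    are then discarded. Associations contributed by fewer than `min_contributors`
    owners are dropped; this threshold is an assumed safeguard, not from the text.
    """
    contributors: dict[Association, set[str]] = {}
    for model in models:
        for assoc in model.associations:
            contributors.setdefault(assoc, set()).add(model.owner_id)
    return {
        assoc: len(owners)
        for assoc, owners in contributors.items()
        if len(owners) >= min_contributors
    }


def trend(previous: dict[Association, int], current: dict[Association, int]) -> dict[Association, int]:
    """Change in support per association between two successive harvests."""
    keys = previous.keys() | current.keys()
    return {k: current.get(k, 0) - previous.get(k, 0) for k in keys}


if __name__ == "__main__":
    models = [
        LocalModel("u1", frozenset({("pottery", "taos"), ("cars", "speed")})),
        LocalModel("u2", frozenset({("pottery", "taos")})),
        LocalModel("u3", frozenset({("pottery", "taos"), ("textiles", "taos")})),
    ]
    print(aggregate(models))  # {('pottery', 'taos'): 3}
```

The aggregated result contains only associations and counts. In this sketch, that is what "personal identification is left behind" amounts to before the result is used to arrange store fronts.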

With high-quality informational transparency, the individual entrepreneur is empowered to produce products that are not only competitive but also have qualities that products from corporations cannot claim to have. Built on Topic Maps, this type of interaction is superior to that achieved by e-Bay and Amazon.com.

Human knowledge transparency and privacy

In our work we have always focused on how there might be a global solution to core problems found in current information science. The solution stands on the complete rejection of the academic discipline of artificial intelligence. The alternative is derived from what is often called complexity theory. As with all second school advances, the first school quickly developed a polemical structure that acts to discount the insight being made available. This is also true of the academic discipline of complexity theory, so one has to be careful.

The key to the second school literature is the work of Robert Rosen (1934–1998) and his definition of complexity. Rosen’s work is extensive and focused on modeling biological processes using category theory. Rosen defined complexity as something that is not reducible to algorithmic expression. Far from being a circular argument, Rosen’s definition starts by asking whether natural reality is complex. His answer is yes: nature is always complex, and computational algorithms are always simple (though sometimes complicated).

Rosen argued that if natural reality is complex, then human internal experience is separated from the external world by a barrier that is not algorithmic. Mathematics and logic are seen as extensions of human cognitive production, not as de facto physical reality.

Using Rosen’s definition, the value of the Internet becomes clear in a way that it cannot within the first school. The value is derived from recognizing that human experience and computer programming are categorically separated and have no properties in common. Human experience cannot be reduced to algorithms. Thus the human in the loop becomes essential to a full use of the Internet.

In an optimal economic system, human interaction should produce ontological models of experiential reality. What do I want? What is good for me?

In ontological models, what we see is the behavior of computer-based processes, not the internal reality of individual humans. We see abstractions, and as long as we do not mistake these abstractions for full and complete natural reality, we are in a position to use the Internet more fully. We claim that human wisdom is a by-product of individually owned transparency about economic decisions. Using the .vir standards, wisdom replaces fantasy and confusion.

The massive funding of artificial intelligence, perhaps as much as $80 billion in direct federal funding over four decades, has created a warped scientific discipline, now supported by hundreds of PhDs trained in a narrow fashion to believe that algorithms can think and even feel emotions.

By the early ’50s, the old and vague question ‘Could a machine think?’ had been replaced by the more approachable question, ‘Could a machine that manipulated physical symbols according to structure-sensitive rules think?’ This question was an improvement because formal logic and computational theory had seen major developments in the preceding half century. <Etc, etc> . . . This insight spawned a well-defined research program with deep theoretical underpinnings.

Paul and Patricia Churchland 1998

Technical arguments given by myself and many others show the major AI developments to be mere hat tricks that ignore neuroscience and dismiss as irrelevant the work in cognitive science, biology and systems theory. However, artificial intelligence was funded, and it was consistent with reductionist science and hard-core capitalism. So we have authoritarian academics who argue from the false notion that abstraction is a physical symbol. For the complete arguments against this notion, and for the development of the foundations of the second school, see the online book Foundations for Knowledge Sciences. [7]

The key is transparency, and from transparency comes the possibility that responsibility might evolve. Transparency does not mean setting aside individual rights, property laws, or legitimate national security needs. Transparency does not mean a right to look into private matters. Transparency supports due recourse to legal redress in cases of misdeeds. Of course, the historical canons of law still preserve respect for the privacy of others. The problem, as everyone seems to agree, is that the current confusion allows economic power to trump moral law.

We propose that a subnet of the Internet be dedicated to the safe transmission of business exchanges, and that these exchanges allow a high level of transparency. This subnet is referred to as the .vir subnet. The standards to be enforced in the .vir subnet empower any individual to develop ontological models about what he or she wants to learn or to acquire, or about what is available. These ontological models are streamed as digital objects between service interfaces. The interfaces follow OASIS and IEEE service oriented architecture standards.

Beyond the initial start-up of Virtual Taos, the horizon has interesting investment potential. Technologies supporting the separation of pull and push information have been framed out, and investment is sought for their development. The issue of individual liberty and information privacy is addressed in a way that is surprising. The issues of content management and digital property rights enforcement have also been addressed, again in a surprising way.

Advertising, individual liberties, information privacy, content management and digital property rights are addressed in our proposals. National security and commodity transaction mapping are also addressed. [8]

Differential Transparency

There are two sets of issues that the reader may keep in mind. The first set of issues is related to what second school technology allows to be developed. The second set of issues is related to the moral and legal aspects, particularly those related to liberty and information security.

One core objective of the second school is differential informational transparency. In comparison, the core objective of the first school is the enforcement of a single global viewpoint for the purpose of centralized control. One should understand that centralized control is asserted to be necessary in order to keep social order and economic health. However, the enforcement of centralized control must ignore individual creativity. One may also hold the viewpoint that most humans on this planet are in deep economic trouble. The excesses of capitalism and petty dictators have degraded the world’s environment. The flow of commodities is exceedingly wasteful, and the threat of mass destruction by war, terrorism or natural disaster is present everywhere.

From the second school’s perspective, information technology can be simplified by placing many, or all, individual humans in a better position to exercise a very high level of control over their private information spaces. If only extremists do this, then our populations will suffer. So the development of a ubiquitous, human- and process-centric information technology may be the most essential task that our civilization faces today.

There are advantages to our society that come from empowering each and every individual to control the types of information that personal digital systems acquire from the environment. But if this empowerment is given without some degree of transparency, then the world will become more dangerous and the loss of our liberty will become more likely. So we are faced with a paradox.

The second school technology is based on the use of ontological models developed by the individual. Each individual creates one or more of these models through a process of evolution. The models then act on the behalf of the individual. Great technical knowledge is not necessary to build the models, only the use of a new set of tools. Second school ontology building tools are considerably simplified due to our use of the topic map standard.

We claim that the second school is based on natural science, and that this natural science can be made available to anyone. This claim is a complex one, given the nature of mass education and the questionable outcome metrics of K-12 programs.

The differences between the first and second schools are at the level of foundations and assertions. So far, only in rare cases has the second school viewpoint survived the K-12 programs or been developed by individuals under special circumstances. Around the world, there are groups of innovators who, if organized and funded, have the capability to quickly make personal knowledge operating systems easy and understandable. Such an advance is possible because of the lessons we have learned during the past three decades. For example, those of us who rejected the AI myth long ago have developed methods that can easily be combined.

Bottom line: An implementable system is fully designed and we are ready to implement the .vir standards.

Second school technology instruments every digital object in the .vir subnet, and this instrumentation allows an automated aggregation of use patterns and model information. The Internet is not instrumented, and this fact contributes to the individual consumer's inability to control information spaces.

Digital Property Management

The instrumentation of all digital objects in a subnet of the Internet will allow a shift in common use from the old Internet to a new one. The development of e-commerce in the .vir subnet supports the responsiveness of production systems to changes in demand indicated by changes in individual models. Digital property rights are secured in a simple fashion by instrumentation within the digital object itself. This is done best if the object is generative, as is the case with fractals. [9]

Let us look at the near term. A solution to the digital property rights issue would transform the entertainment industry. It is reasonable to look to the creative expression of artists as representative of the type of intelligence that seems missing in modern social system behavior. An artist is often concerned with creativity in the moment, while trying to capture some emotion, to tell a story or to charm an audience. In the current marketplace, the artist is not entirely free, because of a number of external constraints.

The two missions, creating the second school and creating virtual markets, merge when one takes the viewpoint that human creativity cannot be reduced to formal algorithms. The first school asserts that human intelligence is reducible to formal algorithms. We seek to empower a revolution in the entertainment industry that changes the utility function underlying the choice to purchase.

Our technology produces localized informational transparency separately from global informational transparency. An individual's informational viewpoint involves interpretation. The development of rational coherence is based on individual differences. Category formation is involved. In natural category formation there are two processes, one integral and one differential. Considering both the integrative and the differential processes enables us to understand the nature of coherence and viewpoint. Let us talk about this.

Cultural coherence integrates many viewpoints, often by leaving out distinctions that some individuals feel strongly about. Thus the source of both social and personal discordance is rather strongly associated with failing to achieve an integration of viewpoints that is at the same time differential, i.e., one that allows individual differences. Localization of information requires that an actual human individual manage coherence and viewpoint. Localization of experience carries with it the development of coherent views of momentary experience, and within this localization one finds the origin of artistic creativity and appreciation. Global informational coherence operates on similar principles but with different mechanisms.

Global informational transparency requires a real-time aggregation of natural categories of expression, occurring locally, into a single complex where coherence and viewpoint are often absent. If a single coherence were imposed instead, the differential representation of various multicultural viewpoints would be subsumed by a single dominant viewpoint. This aggregation preserves both the integral and the differential by reflecting natural category formation in the kind of computational processes underlying the formation of ontological models. This aggregation process is discussed in detail in Foundations.

We have substantial evidence that reasonable capitalization of our technology and our approach will bring a new generation of products. For example, OntologyStream Inc has techniques for developing computer-based ontological models that do not impose a single, global, pre-established coherence. Coherence is given in a late-binding process where there is inference, but this inference is separately defined as part of situational, individual or cultural perspectives. These techniques are based on principles derived from Soviet-era computational theory and on a historical literature in the theory of sign systems (semiotics) and in theoretical biology. The techniques depend on the definition of choices to be made by informed humans. This means that software engineers cannot make judgments on behalf of others. The choices that humans make can be mediated by computational processes driven by choice-point and blueprint standards. These standards are part of the .vir standards.

Second school notions about global and localized coherence and transparency are grounded in precise scientific discussion. This may help to heal the chasm that has developed between the arts and the sciences. It is perhaps fair to say that the sciences have been overly integrative, while the arts celebrate diversity. Informational transparency over all human knowledge is sought by comparing individually acquired information to some type of objective grounding in real-world phenomena. In this way, the mediation of viewpoint seeks a commonality that is structural in nature. The second school provides foundational theories as to how individual viewpoints form and under what conditions these individual viewpoints interact with each other in social settings. Methods are developed that ask individuals to observe the processes whereby they feel and think. These methods are both meditative and integral, and they are placed into a context that is differential, seeking to see the multicultural character of human knowledge.

Artistic notions about minimalism are relevant to a differential between coherence and viewpoint. Minimalism in artistic production creates a minimal form that becomes alive during the act of perception. The act of experiencing the art is interpretative and establishes individual perspective at the time of interpretation. Our focus on artistic expression can also be generalized to an understanding of how entertainment is produced and distributed, given the ability of the individual to define what kind of entertainment he or she is most interested in. The formation of minimal categorical representation follows minimalist traditions that are well expressed in the scholarship on human perception.

There are technical means to assist in developing minimalist representations of integral and differential knowledge, both by individual humans and by communities. In natural linguistic process, viewpoint mediation is most often seen as the reconciliation of terminological differences. In computational processes, we suggest that this mediation will occur via the actions of web-services and computational ontological structure. Our work in natural category formation provides some details related to an encoding of characteristic behaviors and what we have called anticipatory knowledge. These details are fully described in published materials.

A final word is in order on the use of differential transparency to effect the promised change in worldwide economic transactions. The separation of information flowing from advertisers to consumers enables a safe environment within which to instrument the pull mechanisms needed for second school technology. The pull mechanisms depend on an instrumentation of transactions made between types of individuals and the space of commodity and informational exchanges. The purpose of second school technology is to allow the individual to develop information that he or she can validate about what services and what commodities he or she wishes to purchase. This kind of personal knowledge is to be reflected in individual ontological models, developed with the aid of new tools.

Commodity Transport and Informational Transparency

The development of a distributed, worldwide market based on commodity transport and informational transparency is possible. [10]

Many processes are anticipating the .vir subnet of the Internet. There are currently major developments in individual-to-individual commerce using systems like eBay. Most commodity transport is driven by corporate commerce. Governments have an increasing responsibility to monitor commodity transactions that cross national borders. The movement towards import/export inspections is driving the development of producer-to-consumer monitoring of commodity movements.

The technology we have developed uses an internal instrumentation of all digital objects in transport in the .vir subnet. Digital object instrumentation produces a decentralized measurement of information associated with commodities. The instrumentation records usage and makes periodic reports to transaction monitoring systems serving various enterprises. Monitoring information is then further aggregated into a global model of all commodity transactions. This model becomes useful in reducing waste in the acquisition, movement and use of commodities.

The real-time information provided by the system I designed for U.S. Customs in 2004 provides input to the measurement function, enabling anticipatory reactions to patterns in the worldwide commodity markets. [11] This type of commodity monitoring is essential to protection against terrorism, and also to reducing waste within global manufacturing processes.

For example, a group of individuals living in geographically separated regions may join together to create a manufacturing process that reclaims automobiles from junkyards. Basic chassis and other parts are used with new components. Rebuilt automobiles can be designed and produced with new hybrid electrical-combustion systems.

The movie Who Killed the Electric Car? should be viewed in order to establish some history. We are no longer in 1996, when the automobile and oil industries conspired to take the existing fleet of high-quality electric cars off the street and crush them. [12]

The aftermarket car parts business is already a multi-billion dollar industry. Federal tax credits plus some type of popular fad might end the production of non-hybrid cars. The .vir standards would be instrumental in a transformation from oil-based transport to electricity-based transport. Layered transparency provided by encapsulated digital objects (EDOs) creates certainty on the production side of new marketplaces. For example, the rebuilding and conversion of a certain year and make of junk automobile into hybrid gas-electric transport may benefit from an inventory of source components.

The global identification of component parts is then tied to the manufacturing of new components. Information on new component production is then combined with information on parts obtained from junkyards. These rebuilt vehicles may cost half what a new car costs and yet have a ten-year warranty. Such warranties are already being developed based on eBay consumer reports. For example, localized manufacturing processes in several states may collaborate to insure the performance of a drive train.

Our business case is based on the conjecture that a new worldwide production system, based on individual-to-individual supply chains, depends only on the transport of commodities and the human knowledge sharing enabled by ontological models. The emerging production and distribution system is aided by a radical decrease in marketing cost, due to the informational connectivity enabled by the Internet. The anticipation of commodity availability is enhanced, and stability and dependability result in the marketplace.

If this second manufacturing revolution is to develop, the information structure itself has to have the type of instrumentation that OntologyStream Inc has developed for video, audio and gaming software. Ontological models themselves need the digital rights protection afforded by a human knowledge distribution system based on EDOs. The human touch within layered monitoring systems provides places where human or human community decisions both verify and make use of real-time aggregated information about commodity flow. A corresponding management of information flow is necessary if individuals are to perform complex manufacturing processes in home or small facility environments. This management of information cannot be based on advertising and predatory behavior, as is so often exhibited on the current Internet. The flow has to be driven by individual manipulation of private information spaces where learning materials and commodities are considered to be co-equal in value. Examples include micro-farming activities similar to organic farming and vineyards.

OntologyStream Inc has worked on the underlying EDO technology for two decades, while we also watched for an opportunity to transform production and consumption systems. Incremental adoption of EDOs in fine art markets, digital video markets, movie markets and digital game markets allows the technology to gain acceptance and to grow roots. Incremental adoption works in favor of a series of business innovations, starting with the art markets, then with the digital video and digital game systems, and finally with EDOs having high value ontological models related to manufacturing processes. The educational market is affected when digital forms of scholarship can be created and distributed individual-to-individual with a less intrusive intermediating marketing system.

The e-market centered on artistic production from Taos, New Mexico, is the perfect market in which to develop a linkage between a commodity transport system and a human knowledge sharing system. By starting with the distribution of artist-authorized digital images of fine art from the Taos, New Mexico, markets, we establish a principle. The distribution system includes the offering of a digital extension portfolio. Each portfolio has one copy of each of 20 images. The extension portfolios are then used to show the images to friends and family. Sales occur over the Internet, and production is just-in-time, allowing the next available number to be assigned to a given size and medium, on fine art paper or canvas.
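The just-in-time numbering step can be made concrete with a small registry. This is a minimal sketch, not the system used for the Taos market: it assumes, hypothetically, that numbers run independently for each combination of image, size and medium, and the class name `EditionRegistry` and the sample identifiers are invented for illustration.

```python
from collections import defaultdict


class EditionRegistry:
    """Hypothetical registry that hands out the next available edition number.

    Assumption: numbering runs separately for each (image, size, medium)
    combination; the text does not say exactly how numbers are keyed.
    """

    def __init__(self) -> None:
        # Every combination not yet sold starts at edition number 1.
        self._next_number = defaultdict(lambda: 1)

    def sell(self, image: str, size: str, medium: str) -> int:
        """Record one sale and return the number printed on that piece."""
        key = (image, size, medium)
        number = self._next_number[key]
        self._next_number[key] = number + 1
        return number


registry = EditionRegistry()
print(registry.sell("image-07", "16x20", "canvas"))          # 1
print(registry.sell("image-07", "16x20", "canvas"))          # 2
print(registry.sell("image-07", "16x20", "fine art paper"))  # 1
```

Because a number is assigned only at the moment of sale, nothing has to be printed in advance, which is the point of just-in-time production.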

Ontological Modeling of Transaction spaces

To develop the .vir market properly, we need the kind of transparency that comes from ontological modeling of real time social discourse. The public does not need to understand the technical details, but the .vir system does need to develop specific architectural processes within the .vir subnet.

The issue of public understanding is not simple but is manageable. The grounding principles are expressed in a curriculum, and this curriculum is available as learning system content via the Internet and DVD. The steps that we are taking now are the smallest steps that can be made given the resources we have while still keeping the .vir dream alive.

The development of video sharing between wisdom study groups is a key to the educational and deepening process that is involved in the next business steps. Please excuse my moving back into a technical discussion.

Robert Rosen’s work is one of the key foundational works of the second school. [13] The technical definition of Rosen complexity is simple. Any natural system whose behavior cannot be fully modeled by algorithmic process is said to be Rosen complex. The social discourse is a type of transaction space. This transaction space is properly complex in the Rosen sense.

The second school asserts that social discourse is Rosen complex, and thus that any high-quality model of social discourse needs human involvement in the creation of information structure about that discourse. Web-based service transactions are only simple if they are very basic, and this is where the first school service-oriented architecture standards of the W3C run into difficulties.

In the second school, human services are provided in the .vir network by including the human in the design of information and in decisions about how information moves within the system. The individual-to-individual production process already exists in many ways, but it is not yet supported, to the extent required, by informational objects whose value is protected as encapsulated digital property. As a consequence, the quality and relevance of information is not as high as it will be in the near future.

We repeat the argument that encapsulated digital ontology is needed if virtual conversation and collaboration are to be elevated above what we have now. The argument that economic systems have a hidden hand is the same as the argument that system control can be modeled as a formal utility function. The argument supporting the .vir architecture is that significant long-term changes to world markets are occurring due to individual-to-individual communication transactions and to the maturity of worldwide commodity transport systems.

The technology for ontological modeling of real-time social discourse was demonstrated for the first time in history in 2002. The J-39 system, a then-unclassified US intelligence system, was designed in part by me. Its basic components were word and phrase co-occurrence coupled with linguistic and ontological modeling. A significant human-in-the-loop component was involved.
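The co-occurrence component mentioned above can be illustrated generically. The sketch below is not the J-39 implementation, whose details are not given here; it simply counts how often two words appear within a small window of each other, the kind of raw statistic a human in the loop would then review. The function name `cooccurrence_counts` and the default window size are assumptions made for illustration.

```python
from collections import Counter


def cooccurrence_counts(sentences: list[str], window: int = 3) -> Counter:
    """Count unordered word pairs whose positions differ by less than `window`.

    Tokenization is deliberately naive (lowercase, split on whitespace);
    a fuller system would add phrase detection and linguistic normalization.
    """
    counts: Counter = Counter()
    for sentence in sentences:
        tokens = sentence.lower().split()
        for i, left in enumerate(tokens):
            # Pair each token with the (window - 1) tokens that follow it.
            for right in tokens[i + 1 : i + window]:
                if left != right:
                    counts[tuple(sorted((left, right)))] += 1
    return counts


discourse = ["the market moves", "the market waits"]
pairs = cooccurrence_counts(discourse, window=2)
print(pairs[("market", "the")])  # 2
```

Counts like these are only raw material; in the approach described above, the categories built from them are decided with significant human involvement.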

The BCNGroup digital Glass Bead Games are designed as a radical improvement over the J-39 system and provide a real-time measurement of categorical patterns occurring in social discourse. The improvements lie in a more profound use of formative category theory and in giving more control to human-in-the-loop decision-making. There is also an architectural change that uses the data structures defined in the Notational Paper. [14]

Perhaps the first thing to keep in mind is that computer science has been altering the long-established behavioral and functional elements experienced by human systems. An important example has to do with the role of reinforcement. Direct experience is increasingly replaced by an authoritatively imposed consent that the system, the information system, is designed correctly. Some now argue that the future safety of the world depends on creating a centralized authority for all information design and transactions on the Internet. This argument is gaining power because corporate structures need to establish new markets.

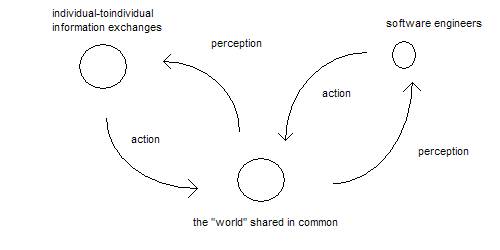

Figure: Diagram explaining the proposed shift in control over information structure

It is not merely a question of who is in control. We conjecture that if decision-making has no direct reinforcement mechanism, then the quality of decision-making will collapse. Optimal responses to natural disasters may be possible within a decentralized control utility function based on the architecture of the .vir subnet of the Internet. A second set of conjectures develops the hypothesis that the cycle of action and perception involved in human decision-making is controlled by the design of information.

How well can algorithms design information without human mediation?

Can algorithmic mechanisms feel the consequences of making a mistake?

Work on neural networks and genetic algorithms includes what is called reinforcement learning, and much of this work is exceptional for a great number of reasons. We cannot review this literature here. [15] The core issue, however, concerns deployed database-type systems, in which algorithms are not altered by the kinds of reinforcement that shape human behavior. The algorithms and data structures are designed by professional and business interests and do not reflect the needs of individual-to-individual information exchanges. In most current cases, an information system's information design comes from database engineers who do not experience the consequences of the design in real time.

In the .vir subnet, using the .vir standards, differential transparency is established because processing and memory mechanisms do not encode the actual data used to aggregate the measurement. The restriction is structural: the .vir processing and memory mechanisms CANNOT encode the actual data. There is simply too much data to store. [16] The memory and recording situation is similar to the brain's requirements for providing memory. A memory of all experience is simply not possible unless awareness of the past is generated from an aggregation of invariances: colors, shapes, sounds, emotions, conceptual associations and the like. So the conjecture is that a generative mechanism is involved in the development of cognitive awareness. The .vir standards mirror results from quantum-cognitive neuroscience.

Of course, the .vir technology is simple to use. The methods I invented, called categorical abstraction, produce a topic map that can then be used to show how social discourse is changing as a function of time, location and cultural identity. The methods of categorical abstraction are available in open source literature that I published (1998–2003).

Information transparency provides the mechanism for developing abstracted categorical information that can be pulled by individual models of interest. The pull-advertising system is bootstrapped into place by applying the concepts of the .vir subnet to Virtual Taos. Once Virtual Taos begins to be understood, the exact same architecture can be used to distribute music videos, computer-based game environments and digital movies.

The Anticipatory Web

The second school core principles establish a means to produce information spaces referred to as an anticipatory web of information. An anticipatory web develops when the meanings of real-time transactions are grounded in a theory of emerging category and understood, or interpreted, by one or more individual humans. Real knowledge of the real structure of a real transaction space is required, and when this type of information structure is available, one can anticipate future states.

The interpretation of information can be at the intuitive level or can be made explicit and subject to verification. In certain ways, the development of a specific anticipatory web of information is similar to the development of modern mathematics and physics. There are differences, of course. One of the differences has to do with the object of investigation, with anticipatory webs often being about events in commodity transaction spaces or in the social discourse.

The Anticipatory Web is not merely a buzzword but a real possibility. To understand the science and technology grounding this possibility, we depend on validation methods. These methods have been used in mainstream science, particularly in the fields most influential on my work: systems theory and quantum cognitive neuroscience. We also use extensions of mathematics into category theory and specific simplifications in computer science. The simplifications include a generalization of the hash table, a data management system similar to a relational database, and the use of Topic Maps.

Nature of Abstraction

The key difference between the first and second schools lies in their respective positions on the nature of abstraction. [17] Our theory of emerging categories follows precisely a specific theory of the formation and use of natural language in common settings. Natural language use involves human induction of symbol systems, and the present real-time awareness of categorical structure specific to experience. We discuss issues of category formation and human induction in a private literature.

Along with differences, there are similarities. The Anticipatory Web is similar to what is commonly called the Semantic Web by the first school of information science. The Semantic Web community has difficulty overcoming what should be simple problems, such as data interoperability.

Data non-interoperability is completely solved by using a technology invented by Bjorn Gruenwald in the late 1990s and generalized by several parties. This technology simply treats all string information as if the string were an integer, with individual letters regarded as the digits of a base-64 numbering system. Once this technology is adopted, all information can be related in various ways using operations defined in Hilbert space mathematics.
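As an illustration of reading a string as a base-64 integer, here is a minimal sketch. The 64-character alphabet and its ordering are assumptions, since the text does not specify the symbols of the Gruenwald format. The sketch uses bijective base-64 digits (1 to 64 rather than 0 to 63) so that distinct strings such as "A" and "AA" always map to distinct integers, and it does not attempt the Hilbert space operations mentioned above.

```python
import string

# Hypothetical 64-symbol alphabet: the source does not list the symbols or
# their order in the Gruenwald format, so this choice is only illustrative.
ALPHABET = string.ascii_uppercase + string.ascii_lowercase + string.digits + " _"
BASE = len(ALPHABET)  # 64
DIGIT = {symbol: position + 1 for position, symbol in enumerate(ALPHABET)}


def encode(text: str) -> int:
    """Read `text` as a bijective base-64 numeral whose digits run from 1 to 64."""
    value = 0
    for symbol in text:
        if symbol not in DIGIT:
            raise ValueError(f"{symbol!r} is not in the 64-symbol alphabet")
        value = value * BASE + DIGIT[symbol]
    return value


def decode(value: int) -> str:
    """Recover the string whose encoding is `value` (the inverse of encode)."""
    if value < 0:
        raise ValueError("encodings are non-negative integers")
    symbols = []
    while value > 0:
        value, remainder = divmod(value - 1, BASE)
        symbols.append(ALPHABET[remainder])
    return "".join(reversed(symbols))


print(encode("Taos"))          # 5356141
print(decode(encode("Taos")))  # Taos
```

Once every string is an integer, strings from different sources can be compared, indexed or combined with ordinary integer arithmetic, whatever their original storage format.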

Data non-interoperability is essentially caused by proprietary interests and by a lack of understanding of optimal representational formats, such as the Gruenwald format. Once data interoperability is no longer an intractable problem, the world of computationally based ontological modeling opens up.

Our goal is to establish a deployed technology by building the infrastructure for a global marketing system based on pull type advertising and digital property management. As will be discussed, the pull of information to an individual can be accomplished through an ontological model of the individual viewpoint. Data interoperability allows what is built on top of the .vir subnet to work far more completely.

It is conjectured that if advertising, i.e., information about products for sale, is archived in a repository and pulled by these models into ad slots, the current market may be transformed. [18] The models can encode consumer information as well as service processes for validating product claims.
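A toy version of the pull mechanism can make the idea concrete. In this sketch, which is an assumption rather than a specification of the .vir standards, an individual's interest model is a set of topic weights held on the individual's side, each archived ad carries topic tags, and the model fills its ad slots with the best-scoring ads. All class and function names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Ad:
    """An archived advertisement, tagged with how strongly it relates to each topic."""
    ad_id: str
    topics: dict[str, float]


@dataclass
class InterestModel:
    """A hypothetical individual model: topic -> how much the person cares about it."""
    weights: dict[str, float] = field(default_factory=dict)

    def score(self, ad: Ad) -> float:
        # Dot product of the individual's interests with the ad's topic tags.
        return sum(self.weights.get(topic, 0.0) * strength
                   for topic, strength in ad.topics.items())


def pull_ads(model: InterestModel, repository: list[Ad], slots: int) -> list[str]:
    """Pull up to `slots` ads from the repository, best match first.

    Ads with no positive match are never shown, so a slot may stay empty
    rather than being filled by pushed advertising.
    """
    candidates = [ad for ad in repository if model.score(ad) > 0]
    candidates.sort(key=model.score, reverse=True)
    return [ad.ad_id for ad in candidates[:slots]]


repository = [
    Ad("ad-sports", {"sports": 1.0}),
    Ad("ad-hybrid", {"hybrid cars": 1.0}),
    Ad("ad-taos-print", {"fine art": 0.9}),
]
model = InterestModel({"fine art": 1.0, "hybrid cars": 0.5})
print(pull_ads(model, repository, slots=3))  # ['ad-taos-print', 'ad-hybrid']
```

The design choice worth noting is the direction of flow: the repository never inspects the model; the model selects from the repository, which is the separation of push and pull the text argues for.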

It is also conjectured that giving individuals control over the nature of their own models has several benefits. Control sets up a positive reinforcement mechanism that should, in most cases, provide a rapid evolution of the value that individuals see in information contained in pull advertising. In many cases, one may see the collective will of individuals controlling markets directly in ways envisioned by demand-side economic theory. What we need is a proving ground.

Appreciation, Influence, Control

We are now, in October 2006, in the late stages of a process that acquires feedback and ideas from community members. The development of the Virtual Taos concept has allowed us to proceed along a path using the AIC (Appreciation, Influence, Control) process development methodology. [19] Because this process has matured, it is now possible to use second school technology in the design of an e-market where new principles related to entertainment and creativity can be demonstrated.

Bill Smith, who is now at the School of Engineering Management at George Washington University, described AIC methodology in the following way:

Appreciation, Influence and Control are the terms used to describe the three universal power relationships found in any system. Appreciation describes the kind of power most characteristic of our relationship to the "whole" system. Influence describes our relationship to parts of the whole system which we do not control. Control describes the relationship of the individual part of the system to itself.

The primary feature of the AIC approach is that it allows organizers to identify, call upon and use all the power available to them to achieve their purposes. By personality, organizational function or culture we tend to overuse one of these power relationships and under-value the others.

Bill Smith, 2002

In Bill's theory, a rather long period has to pass during which only the appreciation of others is practiced: no effort at control and no scheming to create lines of influence, at least for an initial period. What is then defined is common to many, rather than specific to one.

As of September 2006 in Taos, this appreciative field is only partially formed. The Town of Taos is stuck in a status quo that, like many other places around the world, is controlled mostly by random events and the self interest of a few. This status quo has created the potential for a special e-market based on advanced principles.

Consistent with second school core principles, we see value as having both a distributed and a localized nature. These issues are historical in nature. The industrial age created commodity markets where localizable value was often harvested without considering the distributed value of the commodity. Our perspective is that, over a period of two centuries, the harvesting of natural resources has created imbalances that are now addressed by second school science. Our economic system and our science now have an opportunity to address the imbalances that have accumulated over the past centuries.

The worldwide advantages of second school science are twofold. The first is that the focus of economic activity shifts from supporting the accumulation of wealth by the few to healing environmental and social imbalances. The second is igniting the second manufacturing revolution. This revolution is initiated when distributed processes begin to provide services that seek global values. The process needs two things: timely, accurate information and commodity transport.

So, in theory, the two missions are being brought together as a single process that establishes economic value for many people. The integration of the two missions also enables the transformation of purely economic processes into spiritual/structural processes having an economic foundation.

The scale of integration is multi-level: it may have very small impacts on some individuals without making large changes in the social world, as well as effects that may in fact shape how history reveals itself.

On the Nature of the First School and Individual Liberty

In summary of Chapters 1–5: our economic system is not Nash-like. [20] Individuals working within very powerful corporate entities have capitalized on the movement towards service-oriented standardization. This behavior is not necessarily natural, but it has been reinforced by an economic system that has come, over several centuries, to be supported by narrow legal and moral assertions. The development of individual-to-individual (I2I) commodity and digital property web-based services allows a shift in the utility function thought to govern global behavior, behavior that underlying processes reinforce or inhibit. Modern natural science has gotten a handle on how these utility functions work.

The current push-advertising-based economic processes produce a great deal of waste and reinforce greed-based behavior. The proposed .vir Internet subnet standards create a separation of the push advertising mechanisms from the pull of information to individuals, based on each individual's development of computational ontological models of his or her interests. This separation causes a shift in the economic utility function. The .vir standards also create a separation between individual ontological models, which must be kept private unless there is a separate court order, and global models of social discourse, commodity transactions around the world and consumer purchasing trends.

The capitalization of service-oriented concepts follows a process similar to the one that converted neural networks, genetic algorithms, artificial intelligence, business process re-engineering, knowledge management and the like. The conversion takes a challenging innovation [21], dilutes its potential impact, and eventually seeks to discredit fundamental advances that would upset the status quo.

What is interesting is the paradox, which the second school refers to as the "origin of control" paradox. [22] Many corporate IT firms redefine service-oriented architecture to mean the provision of service to lines of business.

The current systems cannot see the emergence of new event structure. This systemic failure arises because there is no real measurement process and no firm grounding for the induction of meaning into the symbols used by people and machines. In the current systems, the induction of new social symbols is not natural, because the information produced is not grounded in a measurement.

By shifting control over the design of information structure to all, or most, individuals acting in a natural way, we create an induction process that actually sees what consumers in the markets want. The production processes feeding into those markets can then be based on what consumers want rather than on what producers want to sell.

This transparency develops under the complete control of the individuals who allow the measurement. Individuals who do not allow the measurement simply contribute no information.

Protection of individual liberty, by law and judicial review, is essential if enough individuals are to trust the .vir subnet. This protection has to be designed into the technology of the .vir subnet and enforced through judicial review.

Second school instrumentation allows real-time measurement of collective social responsiveness. Measurements are converted into tokens that amplify that responsiveness. Measurement occurs at two levels. The first is individual and localized to an individual environment, with transparency to the individual about what is modeled locally and what is transmitted into the wider environment. An intermediate environment based on the notion of a trust circle is likely to be used. From this trust circle, an abstraction process produces collective intelligence during the induction of symbol systems.

Again, this process must be transparent to all individuals from whom raw information is measured. This raw information is then abstracted into a global model of market demand, as well as of the thematic structure of collective responses. More on this technology is available in two papers by Prueitt [23].
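The two-level measurement can be pictured with a minimal sketch. It assumes, purely for illustration, that each local model is reduced to counts of interest topics, that a trust circle pools the counts its members release without passing their identities upward, and that the global model of market demand is the sum of trust-circle totals. The names `InterestProfile`, `TrustCircle`, and `global_demand` are hypothetical and are not part of any published .vir standard.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class InterestProfile:
    """Hypothetical local model: topic -> strength of interest, held by the individual."""
    owner: str
    interests: Counter = field(default_factory=Counter)
    consented: bool = True  # individuals who do not allow measurement contribute nothing

    def release(self) -> Counter:
        # Only topic counts leave the local environment; the owner's name does not.
        return Counter(self.interests) if self.consented else Counter()


@dataclass
class TrustCircle:
    """Hypothetical intermediate level: pools what its members choose to release."""
    name: str
    members: list

    def abstract(self) -> Counter:
        pooled = Counter()
        for member in self.members:
            pooled.update(member.release())
        return pooled


def global_demand(circles) -> Counter:
    """Hypothetical global model of market demand: sum of trust-circle abstractions."""
    total = Counter()
    for circle in circles:
        total.update(circle.abstract())
    return total


alice = InterestProfile("alice", Counter({"giclee prints": 3, "trees": 2}))
bob = InterestProfile("bob", Counter({"trees": 1}), consented=False)
carol = InterestProfile("carol", Counter({"pottery": 4, "trees": 1}))
circles = [TrustCircle("north", [alice, bob]), TrustCircle("south", [carol])]
demand = global_demand(circles)
print(demand["trees"])  # 3: bob did not consent, so only alice and carol count
```

Summing counts is only the simplest possible abstraction; the induction of symbol systems described above would replace it with something far richer, but the separation of levels stays the same.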

How systems express intelligence is a subject of some interest in many disjointed disciplines, including those that support business thinking. Applying principles from the study of system intelligence to the control mechanisms exercised over market production must be carefully structured. One key concern is the security of individual liberty. The basis of this concern changes when one moves from first school to second school thinking.

The second school recognizes that human behavior is both easier to understand and possessed of degrees of freedom that the first school does not see. We see that each individual is exactly the same as any other individual, for example in the eyes of the courts. However, we also see that each individual is absolutely unique. The unique expression of individuals is the basis for our laws regarding individual liberties. If the unique expression of an individual is captured in ontological models of human behavior, then we are in danger of providing the system with the means to see and restrict that behavior.

Stratifying the modeling provides both the means to protect individual liberty and the modeling fidelity required to deliver the value that comes from modeling behavior. Individual models are kept local as secure digital objects, each with an interface that provides specific information to the global system. The flow of information through this interface is made transparent to the individual.
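As a minimal sketch of this stratification, consider a local model that answers only the queries its owner has approved and records every request, answered or refused, in a log the owner can read. The class `LocalModel`, the `allowed` set, and the log format are hypothetical illustrations; encryption and secure storage of the digital object are assumed to be handled elsewhere and are not shown.

```python
from dataclasses import dataclass, field


@dataclass
class LocalModel:
    """Hypothetical local ontological model kept in the individual's own environment."""
    private_facts: dict                  # never exposed directly to the global system
    allowed: set                         # queries the individual has approved
    transmission_log: list = field(default_factory=list)

    def answer(self, query: str):
        """Interface to the global system: answers approved queries only."""
        if query not in self.allowed or query not in self.private_facts:
            # Refusals are logged too, so the owner sees every request made.
            self.transmission_log.append((query, "refused"))
            return None
        value = self.private_facts[query]
        self.transmission_log.append((query, value))
        return value

    def show_log(self):
        """The transparent view: every request and what, if anything, was sent."""
        return list(self.transmission_log)


model = LocalModel(
    private_facts={"interest:trees": 0.9, "health:condition": "private"},
    allowed={"interest:trees"},
)
model.answer("interest:trees")    # returns 0.9
model.answer("health:condition")  # returns None, because it was not approved
print(model.show_log())
```

Keeping the allow-list and the log inside the local object, rather than in the global system, is what lets the individual see and control the flow without trusting the other side.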

Virtual Taos will serve as a template for the development of a new market in commodity acquisitions. This template works on different principles, as the following example shows. As had occurred many times during the past nine months, I was working on the computer in the room off the side of the main lobby of the Indian Hills Inn when an artist came through the door.

The artist, Chris, was drawing single trees with the notion that trees need to be seen, and perhaps heard from. Her concern was the health of trees, and she wanted to use her spare time to represent these trees and to allow the images to be produced as giclee extensions [24] and perhaps sold at environmental conferences.

The concept that Virtual Taos has developed for marketing giclee extensions of the Pruitt images [25] seemed a perfect fit for what Chris was trying to do. The basic concept is a personal referral marketing system: an individual who owns a virtualTaos Portfolio can sell the images through the Internet and earn a percentage, 20% to 40%, of the retail price.
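The referral arithmetic is simple, but it is easy to get wrong with floating-point money. A minimal sketch, assuming that the percentage is fixed per sale somewhere in the stated 20% to 40% range and that shares are rounded half up to the nearest cent; the function name `referral_share` and the rounding rule are hypothetical, not Virtual Taos policy.

```python
from decimal import Decimal, ROUND_HALF_UP

# Stated range of the referral percentage (20% to 40% of the retail price).
MIN_RATE = Decimal("0.20")
MAX_RATE = Decimal("0.40")


def referral_share(retail_price: str, rate: str) -> Decimal:
    """Return the portfolio owner's share of a sale, rounded half up to the cent."""
    price = Decimal(retail_price)
    r = Decimal(rate)
    if not MIN_RATE <= r <= MAX_RATE:
        raise ValueError("referral rate must be between 20% and 40%")
    return (price * r).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


print(referral_share("250.00", "0.20"))  # 50.00
print(referral_share("250.00", "0.40"))  # 100.00
```

Passing prices and rates as strings into `Decimal` keeps values such as 0.30 exact, which a binary float cannot do.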

The distribution of an artist’s images by individuals reflects the principles underlying the shift we anticipate in individual control over private economic processes. Taos could be one of the places from which a kind of wisdom might grow. Virtual Taos could be a shining example of how to transform individual behavior in support of a new economic reality based on pull information and clarity of consequences.

Taos could be an example of local transformation from an entirely dysfunctional culture, though one charming in specific ways, into a highly productive part of a peer-to-peer service system. First, Virtual Taos could be the demonstration site for the new technology based on digital property rights management and content management.

This means the development of new communities here in Taos Valley and in surrounding towns.... We are considering using a virtual system similar to the new MUD (multiple user domain) at www.secondlife.com. The second school’s technology will shift the focus away from large corporations and back to the individual entrepreneur whose living depends on the transport of commodities and on the human knowledge sharing enabled by ontological models.