Chapter 4

Grounding the Tri-level Architecture in Neuropsychology and Open Logic

Revised February 2003, May 2004

Section 2: The Houk/Barto model of the cerebellum

Section 3: Model of Information Metabolism

Section 4: Advent of Anticipatory Technology

Section 5: Ecological processes

Section 6: Application of stratified theory to information production

Section 7: Generalizing a model of human memory and anticipation

Section 1: Introduction

Mathematical models of the principles of theoretical biology were developed in the 1950s and 1960s (see Levine, 1991, for a history). These models involved differential equations, switching nets and category theory. The formal work of Rashevsky, Rosen and Grossberg helped to establish the foundation of modern connectionism. The resulting field of study is quite a large one.

In stratified theory we have introduced a new set of foundational principles, some in direct opposition to the principles of connectionism and some that are built on a solid heritage shared with connectionism.

Many of the foundational principles of connectionism are controversial. The notion that information can be distributed is perhaps the primary point at issue. The strong form of the field called artificial intelligence, or simply “AI”, has long taken great pains to discredit the notion that information can be anything but explicit. The historical context and motivations for the long-lasting conflict between AI and connectionism must be addressed carefully, and that is not attempted here.

Stratified theory takes one additional step beyond connectionism, a step that is even more controversial. In stratified theory, information can be discrete or distributed, but it can also be present at many organizational levels. These levels of organization can even be nested, so that two systems interacting with each other may have entirely different substructure. The stratification is thus “relative” to location. Moreover, the stratification itself sets up a theory of emergence, as we will see in this chapter.

I have been somewhat involved in primary work on the nature of neural and immune systems, both as a graduate student in mathematics and later in my professional life. I was involved in, and published, detailed work on specific neural circuits. These studies revealed certain general systems properties, including: 1) associative learning, 2) lateral inhibition, 3) opponent processing, 4) intra-anatomical neuromodulation, and 5) cross-level resonance (Levine, Parks & Prueitt, 1993; Prueitt, 1994).

In 1995 my work began to be influenced by:

1) Meeting several of the leading Russian logicians in the fields of control theory and semiotics;

2) A growing awareness that mathematics, as we know it today, has NOT been constructed to model the cross-organizational-scale phenomena that are clearly involved in maintaining autopoiesis (Maturana and Varela, 1989) for a living system;

3) A growing awareness that information technology was hopelessly entangled with the strong form of artificial intelligence and with scientific reductionism.

Each of these three influences has had a profound impact on the development of stratified theory and on the increasing significance of anticipatory technology.

While late twentieth century advances have proved significant, some argue that the attractiveness of, and the hope for, a single theory of brain function, perhaps based on the computer metaphor, misled basic research in cognitive science. The confusion created by the science fiction aspects of artificial intelligence further distorted the process of investigation. Many shared this viewpoint, but it was unclear what could be done.

For example, in 1995, Lynn Nadel made a point of framing the current interest in how learning and memory are organized as an intellectual counter-current to long-held dominant scientific positions that, incorrectly, hold that there is but one general long-term memory system (Nadel, 1995). In his analysis, an analysis shared by others (Schacter & Tulving, 1994), the fixation of memory theorists on the possibility of a single model can be traced to the absence of operational definitions of what a "system" is.

Nadel's conclusions were that multiple memory systems exist; that memory is involved in all or most neural processes; and that experimental methods have identified the functional and architectural characteristics of distinct types of learning and memory.

General systems properties have also motivated the field of artificial neural networks and the related fields of computational control theory. The theory of embedding fields, Stephen Grossberg's original formulation of a methodology for modeling neuronal networks, envisioned the development of a body of computational algorithms that described the neuropsychology, the neurochemistry, and other experimental evidence regarding the mechanisms involved in human perceptual and emotive actions (Grossberg, 1972a, 1972b).

Theories of field dynamics and ecological psychology have been used to extend early connectionist methods and to overcome some of their limitations. Central to these limitations are the various forms of a "vector representation hypothesis" that assume vector space mathematics to be sufficient for modeling biological activities. Ecological psychology in particular offers some new language that helps to separate the concept of a system from the concept of a system’s environment. Once one has this language, one can more easily talk about multiple ontologies and the grounding of a specific ontology within a context, e.g., as having a “pragmatic axis”.

However, no general theory of micro-to-macro transformations of information has yet been developed that fully captures the essential notions of emergent computation and cross-organizational-scale entanglement.

In 2003, bits and pieces of a general theory did exist, though scattered across different disciplines. The notion of a national project began to be discussed. This project would recognize that the limiting constraint on developing what is now called the Anticipatory Web was the educational background common to those who might develop such a new reality and use its capabilities. We concluded that a general theory could not be developed without some sort of counterbalance to popular theories that we felt are at least partially based on a specific type of confusion.

As discussed in previous chapters, notions of cross-scale entanglement exist in the historical literature of quantum mechanics. But the notion of cross-scale entanglement existed almost nowhere else. In linguistics, one could talk about double articulation and thus introduce a parallel between quantum mechanical entanglements and the issue of ambiguation/disambiguation in linguistics (Lyons, 1968). In the various schools of ecological psychology, behavioral cycles are described as having a supporting substructure and an open environment in which cyclic behavior is expressed. Furthermore, behavioral cycles are seen to have distinct modes and to be emergent from an interaction between environment, self and substructure. Action/perception cycles are seen in ecological physics and psychology. Partition/organize cycles are seen in models of molecular computation (Kugler et al, 1990). But these fields of study were not integrated, and no process for integration had been contemplated before the planning began for a national project.

Prueitt’s two conferences at Georgetown University (1991-1992) and Pribram’s series of conferences (1992-1996) at Radford University set the stage for planning the national project on knowledge sciences.

The precise notion of cross-scale entanglement arises from the logic of quantum mechanics and has given rise to various "quantum metaphors" linking consciousness and awareness (Pribram, 1991; 1993; 1994; King & Pribram, 1995; Pribram & King, 1996). The cross-scale entanglement issues, as well as empirical evidence on the formation of compartments and the stratification of matter and energy in time, lead us to restate many fundamental questions about the relationships between psychological objects of investigation and physiological objects of investigation. The national project was conceived because of the difficulty one finds in talking about these questions, even within scholarly communities.

As important a principle as cross-scale entanglement is, we began to realize that the biology of physiological phenomena requires a full theory of stratification. This realization led us to define a tri-level architecture for the machine side of an Anticipatory Web (of information). Those computational constructions that encode the categories of invariance over a set of measured data were to be encoded at one level of organization. Anticipatory templates evolved under a different set of processes. In real time, the two “levels” entangle in response to specific input from the “present moment”. This evocative input draws into an Orb construction some, and not all, of the elements from the measured data invariance. The human side of the Anticipatory Web creates an interpretation of the informational structures.

Our work in 2002 and 2003 developed a standard format and encoding structure both for the set of all categories of data invariances and for a relational operator that defined a local relational property between pairs of these categories. The result was the first Ontology referential bases (Orbs), developed by Nathan Einwechter and myself. The 2003 notational paper for Orbs is provided in Appendix B.
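The tri-level arrangement described in this section can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the Orb implementation of Appendix B: measured-data invariance is held as a set of triples in the < a, r, b > form this chapter uses for Orb constructions, an anticipatory template is assumed to be nothing more than a set of relation labels, and the "present moment" is assumed to be a set of terms drawn from current input. The names `evoke`, `memory`, `template` and `present`, and the sample content, are hypothetical.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    """One Orb-style element < a, r, b >: two locations and a relational label."""
    a: str
    r: str
    b: str


def evoke(memory: set[Triple], template: set[str], present: set[str]) -> set[Triple]:
    """Draw into a situational Orb only those invariance elements that
    (1) carry a relation the anticipatory template looks for, and
    (2) touch at least one term from the present-moment input.
    Everything else stays in memory, unevoked."""
    return {
        t for t in memory
        if t.r in template and (t.a in present or t.b in present)
    }


# Level 1 (hypothetical): categories of invariance over measured data.
memory = {
    Triple("fever", "co-occurs", "cough"),
    Triple("cough", "co-occurs", "sneeze"),
    Triple("fever", "precedes", "rash"),
    Triple("market", "co-occurs", "price"),
}
# Level 2 (hypothetical): an anticipatory template, here just relations of interest.
template = {"co-occurs", "precedes"}
# Level 3 (hypothetical): the present moment.
present = {"fever"}

for t in sorted(evoke(memory, template, present)):
    print(t.a, t.r, t.b)
```

Only two of the four stored elements are evoked here, "some, and not all" of the measured invariance; interpreting what was evoked is deliberately left outside the code, on the human side.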

We began to see that a new paradigm described a technology architecture for text understanding. From this new paradigm one should be able to create a general class of computational aids to human reasoning and information gathering. By anticipating the economic power of a new class of technologies, we hoped to provide some mechanism for the exploitation of this technology. Here at last, we argued, was a value proposition that would allow stratified theory to take center stage.

A specific application of this work to defense intelligence was developed and proposed. Several possible applications to bio-defense were addressed in new work that built on the foundations laid out before 2002. However, we still could not find funding, and the development of the first full Orb-based human-centric information production system could not be undertaken. In 2003 and 2004 our efforts were constantly limited by what program managers, and advocates for things like the Semantic Web, could bring themselves to understand.

We knew that it was just a matter of time, and timing. It was a matter of time before the natural principles of the Anticipatory Web were more commonly understood and appreciated.

For our group, it was also a question of timing. Could we catch the wave and bring the BCNGroup Foundation into an active role? The Charter of the Behavioral Computational Neuroscience Group envisioned the aggregation of new intellectual property in the knowledge sciences as a means to simplify the literature and to fund the development of a K-12 curriculum.

The proposed architecture of text understanding had been grounded in neuropsychology and in the formalisms of open systems theory (Prueitt, 1996). The architecture made a fundamental distinction between a computational and a natural system (Prueitt, 1995; 1997). Understanding this distinction helped us to draw the line between what computational systems may be expected to do and what role natural systems must play in human-computer interactions. This distinction leads to what we called perceptual measurement. Our discussions about perceptual measurement led us to develop the nine-step actionable intelligence process model in 2003, along with differential and formative ontology. A web reference to this work was made available.

The grounding of the Tri-level architecture in neuropsychology has been an important part of our formulation of the principles of anticipatory technology. With this grounding completed, we began convincing policy leaders that the national project was absolutely essential if the United States was to take the next step towards a fuller realization of participatory democracy.

Section 2: The Houk/Barto model of the cerebellum

During the 1980s, the neurophysiology and neurochemistry of the cerebellum and motor expression circuits gave rise to a model of the cerebellum as an array of adjustable pattern generators (Houk, 1987; 1989; Houk & Barto, 1991). A great deal of detail about the physical processes involved was already known in the late 1980s, and computational models enriched this science during the 1990s.

Other examples exist in research literatures that focus on computational models of the hippocampus, the prefrontal cortex, the visual cortex, etc.

The Houk/Barto model of neuronal connectivity was used as one example, among others, to illustrate foundational principles in the context of modeling the physical, single-level processes conjectured to be involved in composing, controlling and expressing motor programs.

Holonomic brain theory (Pribram 1971; 1991) describes a different type of model. The difference is, simply stated, huge. Pribram stressed the difference between localized processes and processes that are simply not localized, or even localizable.

Gravity is an example of a holonomic constraint. It is involved in local dynamics, and yet its nature is only slightly affected by the local boundary conditions of the things that move around in a field expression of this constraint. The anticipatory technologies allow the abstract representation of a holonomic constraint to be separated from the things that have initial conditions, due to emergence, and boundary conditions, related to location. The physical correspondent to the abstraction of the middle layer is a set of physical objects that exists only in a present moment.

How does the brain “see” these objects? Due to the processing of light from the object, a distributed wave propagation of micro-signals from the neuron’s receptive field moves along dendritic pathways towards the cell soma.

Each micro-dendritic event, emanating from groups of synapses, produces a localized Fourier transform (using Pribram’s language) that converts wave potential into discrete event potential in the form of pulses. These pulses use the channel properties of dendrites to move the signal towards the neuronal cell body. This wave of dendritic signals is accumulated as potential energy on the soma side of the axon hillock.

In my interpretation of the theory, at the soma an inverse Fourier transform is generated from the conversion of discrete sequences of micro-pulses into a single semi-coherent electromagnetic field. The inverse Fourier transform is computed as micro electromagnetic events, initiated in dendritic synapses, are accumulated on the soma side of the axon hillock.

As the electromagnetic imbalance is strengthened, phase coherence separates out various parts of the spectrum, resulting in yet another localization and discretization into a Fourier-type representation. The potential energy is drawn from the soma into a substrate composed of protein conformational dynamics. Here chemical valences move the potential energy's location across the hillock, where new field coherence is established.

Field coherence is modeled as a new inverse transform. It is followed by the emergence of a forward transform that provides the weights, or more generally the initial conditions, to elemental pulse generators that produce electromagnetic spikes propagating down the axon. Each forward transform selects a, perhaps quite different, basis. Each inverse transform is involved in establishing the structural mechanics of memory stores and in allowing these memory stores to influence the new forward transforms as they emerge in propagated waves of neural activation.

Of course, the exact class of transforms best suited to model neural phenomena is the subject of a deeper discussion (MacLennan, 1994). The forward and inverse Fourier transform pair is a first approximation.

The Fourier transform takes an electromagnetic signal and re-expresses this signal as a sum of waves of given amplitude and phase, expressed mathematically as regular sine and cosine functions (of time). The sine/cosine basis is used under the assumption that the phenomenon has strictly periodic generators. The inverse transform takes these summed representations and loses the representation that relies on a specific set of basis functions. Moreover, the notion of a forward and inverse transform is an artifact of mathematical formalism, since an electromagnetic wave can be seen in a regularized form, or not, without affecting its ontic status.

The transform can, however, be seen as movement between two temporally defined scales of dynamical activity. These scales are marked by the development of regular patterns of emergence into one scale from the other.
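The forward/inverse pair discussed above can be made concrete with a discrete Fourier transform. The sketch below is a textbook O(n²) DFT in plain Python, offered as an illustration of the mathematics only, not as a model of dendritic processing. The forward step re-expresses a sampled signal as the amplitudes and phases of sinusoidal components; the inverse step sums those components back into time-domain samples, after which the sinusoidal representation is no longer explicit. The test signal, the sample count and the function names are assumptions made for the example.

```python
import cmath
import math


def dft(samples: list[float]) -> list[complex]:
    """Forward transform: project the signal onto n complex sinusoids."""
    n = len(samples)
    return [
        sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(samples))
        for k in range(n)
    ]


def idft(coeffs: list[complex]) -> list[float]:
    """Inverse transform: sum the sinusoids back into time-domain samples."""
    n = len(coeffs)
    return [
        (sum(c * cmath.exp(2j * math.pi * k * t / n) for k, c in enumerate(coeffs)) / n).real
        for t in range(n)
    ]


n = 16
# A hypothetical signal built from two periodic generators (frequencies 2 and 5).
signal = [math.sin(2 * math.pi * 2 * t / n) + 0.5 * math.cos(2 * math.pi * 5 * t / n)
          for t in range(n)]

spectrum = dft(signal)
# For a real signal, 2|X_k|/n is the amplitude of the k-th sinusoid when 0 < k < n/2;
# the DC (k = 0) and Nyquist (k = n/2) bins scale differently and are skipped.
for k in range(1, n // 2):
    amp = 2 * abs(spectrum[k]) / n
    if amp > 1e-9:
        print(f"k={k}: amplitude={amp:.2f}, phase={cmath.phase(spectrum[k]):.2f} rad")

recovered = idft(spectrum)
print("round trip exact to 1e-9:", max(abs(a - b) for a, b in zip(signal, recovered)) < 1e-9)
```

The sine/cosine basis recovers the two generators exactly here only because the signal was built from strictly periodic components that fit the sampling window, which is the assumption the text attaches to the Fourier basis.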

A neurowave propagation is then composed of a series of many (thousands of) transform/inverse-transform pairs.

(Look ahead to Figure 6.) Each forward transform selects a representational basis, and this basis is built from whatever is locally available to give it a physical realization. Each inverse transform encodes information into the new “location” and allows the local ontology to re-express the information.

For some time, a structural order has been observed in the organization of the cerebellum (Brodal, 1981; Ito, 1984; Gibson et al, 1985). Furthermore, experimental data, reviewed in (Houk et al, 1990), suggest that movement signals recorded in the magnocellular red nucleus, located outside the cerebellum, are produced by a pattern generator located in the cerebellum. These signals are recorded by microelectrode. The stratified theory suggests that memory encoding and memory uptake are made via spreading activation waves, where only part of what is occurring is observed as the activation wave. There are some deep issues here related to how informational invariance can be preserved even if, at each of a thousand iterative cycles, the basis for representing this information is changed.
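The question of how informational invariance can survive a thousand changes of basis has at least one simple mathematical analogue. The sketch below assumes, purely for illustration, that each cycle re-expresses an activation pattern in a freshly drawn orthonormal basis; this is a linear-algebra stand-in, not a claim about the neural mechanism. The coordinates change completely from cycle to cycle, yet lengths and the relation between two patterns (their inner product) are preserved, and the original pattern can be recovered if the sequence of bases is retained. All names and values are hypothetical.

```python
import math
import random


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def random_orthonormal_basis(dim, rng):
    """Modified Gram-Schmidt applied to random Gaussian vectors."""
    basis = []
    while len(basis) < dim:
        v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        for u in basis:
            p = dot(v, u)
            v = [a - p * b for a, b in zip(v, u)]
        n = math.sqrt(dot(v, v))
        if n > 1e-6:  # discard the rare, nearly dependent draw
            basis.append([a / n for a in v])
    return basis


def to_coords(x, basis):
    """Forward step: re-express x in a new basis."""
    return [dot(x, u) for u in basis]


def from_coords(c, basis):
    """Inverse step: rebuild the vector that c represents in this basis."""
    return [sum(ci * u[j] for ci, u in zip(c, basis)) for j in range(len(basis[0]))]


rng = random.Random(42)
x = [1.0, 2.0, 3.0, 4.0]   # two hypothetical activation patterns
y = [0.5, -1.0, 0.0, 2.0]

cx, cy, history = x, y, []
for _ in range(1000):      # a thousand iterative cycles, a new basis each time
    basis = random_orthonormal_basis(len(x), rng)
    cx, cy = to_coords(cx, basis), to_coords(cy, basis)
    history.append(basis)

print("coordinates now:", [round(a, 3) for a in cx])
print("length preserved:", math.isclose(dot(cx, cx), dot(x, x), rel_tol=1e-9))
print("relation preserved:", math.isclose(dot(cx, cy), dot(x, y), rel_tol=1e-9))

rebuilt = cx
for basis in reversed(history):  # undo the chain, newest basis first
    rebuilt = from_coords(rebuilt, basis)
print("recoverable:", all(math.isclose(a, b, abs_tol=1e-9) for a, b in zip(rebuilt, x)))
```

Orthonormality is what makes the invariance exact in this sketch. With arbitrary invertible (non-orthogonal) bases, the original could still be recovered from the basis history, but lengths and inner products would no longer be preserved along the way.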

A note is in order on the future implementation of the principles discussed here in the form of a computer-based Human-centric Information Production (HIP) system. The voting procedure in Appendix A is a simple version of quasi-axiomatic theory (see Chapter 6). Either the voting procedure or a fuller implementation of quasi-axiomatic theory works within the tri-level architecture. Before 2002 these implementations were not yet a system of computer programs. After 2004, our task was to build the conceptual foundation for stratification theory from neuroscience and physics. The modeling expressed here (Figure 1) was an important first step.

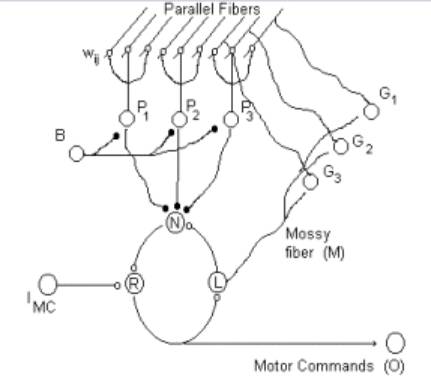

Figure 1: Modified from Houk et al 1989.

The passage “across” the axon hillock is non-Newtonian in that several organizational levels of stratified processes are involved. For this reason, and for others related to the description of actual perceptual measurement, our interpretation of the neural processes at the axon hillock creates an illustrative model of the cross-scale phenomenon, more broadly considered.

The experimental data suggested that electromagnetic potential energy is broken down into a set of basic elemental processes that activate substrate "computation" based on structure activity relationships (Prueitt, 1995, Chapter 1; Zebezhailo et al, 1995). Following this molecular computation, the state of the substrate developed a new representation within a derived set of basic elemental processes. This new representation is in the language of the metabolic environment present at the time, and location, of the specific computation. The change in basis is a natural consequence of the movement from one environment to another.

The loci of activity may have moved due to transport properties associated with cell structure, again as a consequence of molecular computation. In this new location, an emergent electromagnetic field is formed by assembling a subset of the basic elemental processes according to situational influences at that locus.

The Houk/Barto model of the cerebellum works as follows. A recurrent (reentrant) feedback pathway composes a motor program from basic elements consisting of patterns in neuronal bursting. Composition is controlled by activated combinatorial maps in the motor cortex. Purkinje cells { P_i } innervate their dendritic receptive fields into a regular array of parallel fibers from cerebellar sub-cortical cells. Synaptic connections { w_ij } encode associative strengths. Basket cells { P_i }, under the influence of the motor cortex in the cerebral cortex, inhibit a selection of Purkinje cells and allow the uninhibited Purkinje cells to sample the array of parallel fibers for components of motor expressions that are consistent with the combinatorial maps active in the cerebral cortex. These Purkinje cells then read out a signal, expressed via their axons, into the receptive field of a group of subcortical cerebellar cells { N } that form a deep cerebellar nucleus. This cerebellar nucleus has reciprocal connections and common dendritic innervations with populations of cells, arranged in columns, located in the cerebral motor cortex (not shown in Figure 1). The cerebellar nucleus has excitatory connections to the red nucleus { R }, which is located in the midbrain outside the cerebellum. Environmental impulses { IMC } are received by the red nucleus, and these shape the motor commands, which are then projected to an interneuron and to muscle fiber.

The shaping of the program's expression by a sensory stimulus is conjectured by Gibson et al. (1985) to involve a two-stage process whereby the movement signals, recorded at the red nucleus by micro-electrode, are the expression of response patterns composed to meet the demands of motor commands. Continuous feedback from the environment then modifies the expression. A signal is also sent from the red nucleus to the inferior olive (again outside the cerebellum). The inferior olive receives reinforcement stimulation from various sources and projects reinforcement signals that modify the synaptic weights { w_ij }.
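The loop just described can be caricatured as an adjustable pattern generator. The sketch below is a deliberately simplified toy under stated assumptions, not the Houk/Barto equations: Purkinje activity is taken to be a weighted sum of parallel-fiber inputs through the weights { w_ij }; basket-cell inhibition is modeled as a 0/1 mask chosen by the cortex; the deep nucleus and red nucleus are collapsed into a single linear readout, with an optional environmental term standing in for { IMC }; and the inferior olive's reinforcement is a scalar error that adjusts only the weights of uninhibited cells (a delta-rule update chosen here for simplicity). All function names, sizes, inputs and the learning rate are hypothetical.

```python
import random


def purkinje_outputs(weights, fibres, mask):
    """P_i = mask_i * sum_j w_ij * x_j: only uninhibited cells sample the fibres."""
    return [m * sum(w * x for w, x in zip(row, fibres)) for row, m in zip(weights, mask)]


def motor_command(weights, fibres, mask, environment=0.0):
    """Deep nucleus and red nucleus collapsed into one readout, shaped by an
    environmental input (a stand-in for {IMC})."""
    return sum(purkinje_outputs(weights, fibres, mask)) + environment


def olive_update(weights, fibres, mask, error, rate=0.05):
    """Reinforcement from the inferior olive adjusts w_ij of active cells only."""
    for row, m in zip(weights, mask):
        if m:
            for j, x in enumerate(fibres):
                row[j] += rate * error * x


rng = random.Random(1)
n_purkinje, n_fibres = 4, 6
weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_fibres)] for _ in range(n_purkinje)]
fibres = [1.0, 0.0, 0.5, 0.0, 1.0, 0.2]   # hypothetical parallel-fiber context
mask = [1, 0, 1, 0]                       # cortex-selected basket-cell gating
target = 1.5                              # desired motor expression for this context

for trial in range(200):
    out = motor_command(weights, fibres, mask)
    olive_update(weights, fibres, mask, error=target - out)

print(round(motor_command(weights, fibres, mask), 4))
```

Changing the mask, i.e. activating a different combinatorial map in cortex, selects a different subset of Purkinje cells, so the same fiber array can be trained to express different motor components. That gating is the feature of the model this toy is meant to make visible; the inhibited cells' weights are never touched.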

Section 3: Model of Information Metabolism

In the previous section, a network model of one "subsystem" of the brain was described. The purpose of this description is to advocate a specific context for using the stratified paradigm to address a central open question regarding the "discontinuity" of the stimulus pattern from one location to another. This discontinuity was first noticed by Walter Freeman in his study of the olfactory system of rabbits (Freeman, 1995a) and later in other neuronal subsystems (Freeman, 1995b). It was noticed that the stimulus patterns generated by olfactory bulbs were lost during the transfer of the signal from receptor cells to interneurons en route to the olfactory cortex.

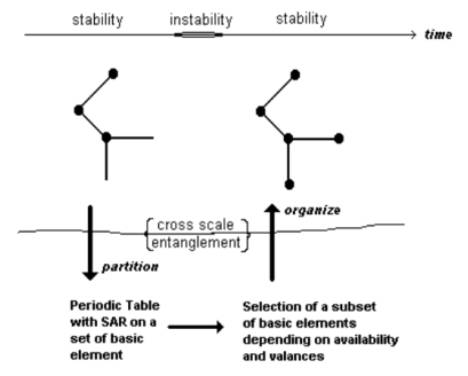

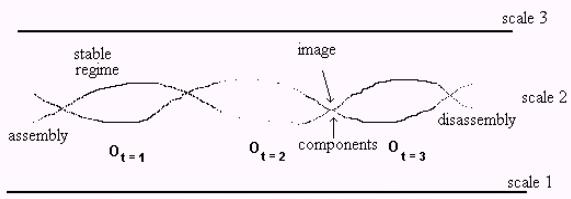

Figure 2: Special episodic sequence where an organized whole briefly dissipates into a substrate and then reorganizes

The model for cross-scale phenomena allows us to make specific speculations. The specific discontinuity of a signal pattern might be a generic property of a transfer function that samples a specific part of the electromagnetic spectrum from dendro-dendritic field interaction and encodes information from this spectrum into structure activity relationship (SAR) information at the level of protein conformational states (see Figure 2). This encoding might be seen as a Fourier decomposition of the potential energy into a recipe that is then propagated via a pulse wavefront along dendritic channels to the axon hillock. It is conceivable that at the axon hillock the pulses, and the encoded information, are placed into some type of micro-environment where a process of reorganization may occur.

The elements of the sequence are initiated and terminated by cross-scale events.

Using this model of cross-scale phenomena, a viewpoint is supported that internal and persistent cycles of emergence and dissipation are a fundamental property of open complex systems. The viewpoint is then applied to various investigations, including the development of computational architecture directed at managing and manipulating machine representations (Orbs) for the purpose of assisting human production of new real-time information.

Natural language understanding by humans depends on the emergence of mental events from which we derive, somehow, an understanding about our environment and ourselves. The relevant science literatures suggest that human understanding is based on the assembly of components from implicit memories, which we represent as statistical-type artifacts, in a context that cannot be fully captured in statistical notation. The development of the categorical abstractions that are encoded as Orb constructions allows a simpler and more powerful means to represent the atoms of memory and the potential relationships that might be established in a real context.

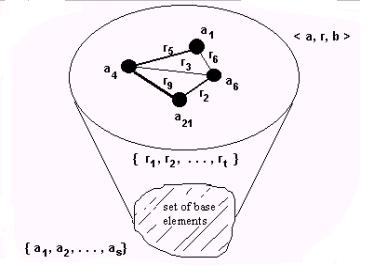

The Orb constructions are merely sets of ordered triples of the form < a, r, b >, where a and b are locations within a graph and r is the relational label of the connection between a and b (see Appendix B). The stratification theory has a very simple computer data encoding format; what the theory has in complexity, the software should have in simplicity.
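Because an Orb construction is just a set of < a, r, b > triples, a direct encoding needs very little machinery. The sketch below is illustrative only and does not reproduce the notation of Appendix B: it stores triples in a Python set, indexes them by location, and answers the most basic graph question, namely which locations are connected to a given one and by what relation. The class name `Orb`, its methods and the sample triples are hypothetical.

```python
from collections import defaultdict


class Orb:
    """A set of ordered triples < a, r, b > with a simple adjacency index."""

    def __init__(self, triples=()):
        self.triples = set()
        self._index = defaultdict(set)
        for t in triples:
            self.add(*t)

    def add(self, a, r, b):
        t = (a, r, b)
        self.triples.add(t)     # a set stores a repeated triple only once
        self._index[a].add(t)
        self._index[b].add(t)

    def neighbours(self, node):
        """Return {(other_location, relation)} for every triple touching node."""
        return {(b if a == node else a, r) for a, r, b in self._index.get(node, ())}


orb = Orb([("cell", "part-of", "tissue"),
           ("tissue", "part-of", "organ"),
           ("cell", "produces", "protein")])
print(sorted(orb.neighbours("cell")))
print(sorted(orb.neighbours("tissue")))
```

Anything beyond the bare triple, such as weights, ordering or provenance, would be an extension of this sketch rather than part of the format described in the text.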

Unlike Resource Description Framework (RDF) type ontology encoding, the Orb constructions do not make a final imposition of semantics outside of a specific situation. A theory about situation type may be encoded in the Orb constructions, as is true with RDF constructions. However, the final imposition of ontological claims is withheld until a reifying process that usually involves a human in the loop and that can take into account the possible novelty within the specific situation. Reification implies both iterated cycles and human introspection.
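The contrast with RDF can be illustrated by separating storage from commitment. In the sketch below, which rests on our own assumptions rather than on the Orb specification, candidate triples are held without ontological status, and only those that a reifying step accepts in a given situation become assertions. The reviewer is a plain callback standing in for the human in the loop, so a different situation, or a different reviewer, can yield a different reified set from the same stored triples. The names `reify` and `reviewer` and the sample triples are hypothetical.

```python
from typing import Callable, Iterable

Triple = tuple[str, str, str]


def reify(candidates: Iterable[Triple],
          situation: set[str],
          reviewer: Callable[[Triple, set[str]], bool]) -> set[Triple]:
    """Commit to a triple only at reification time, in a specific situation,
    and only if the (human-in-the-loop) reviewer accepts it."""
    relevant = (t for t in candidates if t[0] in situation or t[2] in situation)
    return {t for t in relevant if reviewer(t, situation)}


candidates = {
    ("bank", "is-a", "financial-institution"),
    ("bank", "is-a", "river-edge"),
    ("river", "has-part", "bank"),
}


def reviewer(triple, situation):
    # Stand-in for human judgement: reject the reading that clashes with the situation.
    if "river" in situation:
        return triple != ("bank", "is-a", "financial-institution")
    return triple != ("bank", "is-a", "river-edge")


print(sorted(reify(candidates, {"bank", "loan"}, reviewer)))
print(sorted(reify(candidates, {"bank", "river"}, reviewer)))
```

The stored candidates never change; only the reified view does, which is the sense in which the semantics stay open until the situation is known.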

Natural scientists have noted that the experience of context is found in action-perception cycles with an environment. Thus an increase in the use of action-perception cycles would seem to overcome some of the limitations imposed through the use of statistical knowledge. The dependency of the ecological affordance on “known” substructural atoms (Gibson, 1956; Shaw, 1999) forces regularity in substructural type, and thus statistical methods do work in creating measurement processes. These statistical artifacts can then impose the final constraint on any emerging pattern being expressed by the Orb elements.

Direct perceptions of both substructural type and ecological affordance bind the formation of structure into an expression of function.

Of course, this means that the autonomous understanding of targets of investigation by computational systems should likewise depend critically on the cyclic extraction of features from "experience". Within each cycle, new categorical invariance can be identified, and local measures of co-occurrence indicated, by adjusting the set of syntactic units in the set of Orb constructions, e.g. in the set of triples of the form < a, r, b >.
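One way to picture a single extraction cycle is as a pass over new text that updates co-occurrence counts between units and writes them back as triples. The sketch assumes, for illustration only, that the syntactic units are lower-cased words, that "co-occurrence" means appearing within a fixed window of positions, and that the relational label r carries the running count; none of these choices is taken from Appendix B. Each call to the hypothetical `cycle` function folds one new piece of "experience" into the existing counts, so the Orb set is adjusted incrementally rather than rebuilt.

```python
import re
from collections import Counter


def units(text):
    """Hypothetical syntactic units: lower-cased word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def cycle(counts: Counter, text: str, window: int = 2) -> Counter:
    """One extraction cycle: count unordered pairs of distinct units occurring
    within `window` positions of each other, adding to earlier counts."""
    words = units(text)
    for i, a in enumerate(words):
        for b in words[i + 1:i + 1 + window]:
            if a != b:
                counts[tuple(sorted((a, b)))] += 1
    return counts


def as_triples(counts: Counter, threshold: int = 1):
    """Express the current measures as < a, r, b > with r = 'co-occurs:n'."""
    return {(a, f"co-occurs:{n}", b) for (a, b), n in counts.items() if n >= threshold}


counts = Counter()
cycle(counts, "The cell membrane regulates transport.")
cycle(counts, "Membrane transport proteins span the cell membrane.")
for t in sorted(as_triples(counts, threshold=2)):
    print(t)
```

Function words such as "the" survive here because the sketch applies no filtering; deciding which units are salient enough to enter the Orb set is left out.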

We argue that logico-linguistic principles can be used to encode structural characteristics of salient features within human language, and that small subsets of these features will evoke human mental event formation in much the same way as in normal human communication.

In operationalizing a principled theory regarding the use of Orbs as cognitive priming, we developed a simple form of category theory and the Orb encoding. The resulting work produced machine-readable ontology and first-order logics that bind knowledge representation together. This, then, is the anticipatory technology.

Human perception is the key to interpretation of anticipatory ontology. Without human-in-the-loop activity, the machine ontology will not reflect that part of the “now” that makes that “now” unique. The RDF standards depend on a precise encoding of a theory of the world that is then recalled to stand in for human knowledge. RDF is formally closed at run time, whereas Orbs are formally open at run time.

Our logico-linguistic principles reflect general systems properties because the underlying morphology of concept formation has been observed by natural science to conform to a class of general systems properties, including emergence. These properties can be seen in physical systems, particularly if one examines certain viewpoints about quantum field theory. As the final aspects of first-generation anticipatory technology were put into place (2002-2004), we understood that Anticipatory Web concepts would eventually replace Semantic Web concepts. The Anticipatory Web concepts are ultimately easier to understand and have a greater fidelity to how the brain system works. The anticipatory technology is easier to encode and manipulate in the computer. We reasoned that the anticipatory technology would be discovered to be cheaper, faster and better than semantic technology.

Section 4: The Advent of Anticipatory Technology

The transformation of information technology by Semantic Web efforts failed to achieve what was expected. This failure was due to a mismatch between complex natural reality and formal expressions of logic acting on static encodings of taxonomical information.

The marginal successes occurred in cases where standard RDF ontologies were about data formats and data exchange issues (John Sowa, personal conversation). Some degree of interoperability between computer programs was achieved, at a greater expense than was necessary. The confusion over the relationship between semantics and pragmatics made real progress on differential and formative ontology very difficult.

It was clear to the BCNGroup Founders that real-time psychological and social event structure would be understood only if one sees events as having emergent structure. But it was also clear to us that a new mathematics and a new computer science curriculum would soon be developed, reflecting the principles of Human-centric Information Production (HIP) and stratified theory. These clear perceptions led us in 2003 to start planning for a National Project to establish the knowledge sciences as an academic discipline.

The limitation of formal theory in the context of modeling complex systems was the critical scientific result that led to anticipatory technology.

Linguistic principles are not described well by classical physics, nor by classical logic or set theory. The use of mathematics can lead to a specific type of confusion. Hilbert mathematics, for example, is often used to capture so-called “latent” relationships due to linguistic variation seen in the distribution of words in text. But these methods have only one notion of nearness, the notion derived from the induction of the counting numbers (for more on induction see Goldfarb, 1992). Having seen this issue since the early 1990s, the BCNGroup Founders began to make the claim that new logical principles, and notions of membership, are required to design and implement a new generation of machine-based autonomous intelligence.
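The point that such methods have only one notion of nearness can be seen in the standard bag-of-words setting. In the sketch below, a plain term-count model with cosine similarity is assumed as a representative of the vector-space family, not as any specific latent-semantic system. Every relationship between two texts is reduced to a single number computed from counts of shared words. Two sentences with reversed roles come out identical, while a paraphrase that shares no words comes out maximally distant. The sample sentences and function names are hypothetical.

```python
import math
import re
from collections import Counter


def vector(text):
    """Bag-of-words term counts: the text becomes a point in a vector space."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(u, v):
    """The single measure of nearness available: the cosine of the angle."""
    dot = sum(u[w] * v[w] for w in u.keys() & v.keys())
    norm_u = math.sqrt(sum(c * c for c in u.values()))
    norm_v = math.sqrt(sum(c * c for c in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


a = vector("the drug blocks the receptor")
b = vector("the receptor blocks the drug")
c = vector("a compound inhibits binding at that site")
print(round(cosine(a, b), 3), round(cosine(a, c), 3))
```

Word order and role are invisible to the count vectors, so the first pair is "as near as possible" even though the two sentences assert different things.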

By 2004 we began to demonstrate operational information production systems of the type we envisioned in the mid 1990s. Three key concepts were central: (1) organizational stratification, (2) the formation of categories of occurrences within each level of organization, and (3) cross-scale entanglements involved in the emergence of events.

Events at one level were due to the aggregation of categorically defined atoms at a faster level of organization. A top-down constraint on the emergence process is then instrumental in placing the event within its organizational level. Computationally, the Orb sets are sampled by anticipatory templates, and a situational ontology is expressed as a phenotypic graph that, when observed, primes the human cognitive process and results in a mutual-induction involving the deductive capabilities of the computer and the inductive capabilities of the human.
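The aggregation and top-down constraint described above can be sketched in a few lines. In this minimal Python sketch we assume, for illustration only, that atoms at the faster level are (subject, relation, object) triples and that an anticipatory template is simply a set of required atoms; an event is placed at the slower level only when all of a template's atoms are present. The names `Template` and `emerging_events` are hypothetical, and the actual Orb and template structures are richer than this.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Template:
    """Hypothetical anticipatory template: the atoms that must co-occur."""
    name: str
    required: frozenset


def emerging_events(atoms: set, templates: list) -> list:
    """Top-down constraint: an event emerges only if all of a template's atoms are present."""
    return [t.name for t in templates if t.required <= atoms]


# Atoms observed at the faster level (hypothetical subject, relation, object triples).
observed = {("ship", "enters", "port"), ("port", "closes", "gate"), ("crew", "leaves", "ship")}
templates = [
    Template("blockade", frozenset({("ship", "enters", "port"), ("port", "closes", "gate")})),
    Template("evacuation", frozenset({("crew", "leaves", "ship"), ("port", "opens", "gate")})),
]
print(emerging_events(observed, templates))  # ['blockade']
```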

We began to use the notion of induction in a specific way, and contrasted “induction” with “deduction”.

We suggested that deduction is not as natural to humans as our academic traditions indicate. Historically, rationality and formal logics have been represented as the ideal for human thought, not only in some theoretical sense but also in the sense that a perfect human being has been portrayed as being perfectly rational. Rationality is defined in terms of consistency and completeness, and is best reflected in David Hilbert’s grand vision about the completion of mathematics.

Hilbert’s grand vision still governs a great deal of the work in science and mathematics. However, Gödel’s work on the foundations of mathematics and the related literature demonstrate the limitations of this vision.

Two electrical motors will induce changes in each other’s state. The coupling between physical electrical systems is due to “holonomic” constraints related to an electromagnetic field. Holonomic effects are not locally concentrated as described in Newtonian action-reaction systems. The induction occurs non-locally, and thus the mechanism “causing” the state changes is not accounted for within a discrete model.

However, the state changes do occur and can be modeled using continuum field models. The mathematics is elegant but is often beyond the reader’s experience. Chapter One has a longer discussion on the differences between holonomic and non-holonomic causes.

In the same way that two physical electrical motors induce state modifications in each other via a non-local interaction, we observe that symbol systems such as natural language or gestures cause modifications in the mental state of a human being. Because of the confusion about the nature of a computer program, we use the term “mutual-induction” to talk about an action-perception cycle that involves, in each cycle, two mutually exclusive systems:

1) the computer program with some type of display or informational interface

2) the acting and perceiving human, living in real time and experiencing, among other things, the information being computed by the computer.

The human can be involved in creating computer input as a result of the responses that the human has to old information states. These human inputs can result in new informational states in the computer.

Likewise, the computer computes new informational states. Upon viewing an informational state, the human’s mental state is altered, or manipulated via cognitive priming, so that human cognitive acuity and tacit knowledge are primed by the computer’s informational state.

In the anticipatory HIP architecture, the human action-perception cycle is influenced by the series of states generated by the computer.

Figure 3: The anticipatory loop
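The anticipatory loop can be pictured as a cycle in which the computer displays a state, the human responds, and the response produces the next state. The following minimal Python sketch assumes, purely for illustration, that an informational state is a set of displayed terms, that the computer expands a selection using a small association table, and that the human is stood in for by a callback function. In the actual architecture the human side is a living person, not a function; `ASSOCIATIONS`, `next_display` and `mutual_induction` are hypothetical names.

```python
from typing import Callable

# Hypothetical association table the computer uses to expand a selection.
ASSOCIATIONS = {
    "flood": {"river", "rainfall"},
    "river": {"levee"},
    "rainfall": {"forecast"},
}


def next_display(selected: frozenset) -> frozenset:
    """Computer side: show the human's selection plus associated terms."""
    expanded = set(selected)
    for term in selected:
        expanded |= ASSOCIATIONS.get(term, set())
    return frozenset(expanded)


def mutual_induction(display: frozenset,
                     respond: Callable[[frozenset], frozenset],
                     cycles: int) -> list:
    """Alternate computer displays and human responses for a fixed number of cycles."""
    history = [display]
    for _ in range(cycles):
        selected = respond(display)       # human perceives the display and acts on it
        display = next_display(selected)  # computer computes a new informational state
        history.append(display)
    return history


def keep_all_but_forecast(display: frozenset) -> frozenset:
    """Stand-in for the human: keep every displayed term except "forecast"."""
    return frozenset(t for t in display if t != "forecast")


for step in mutual_induction(frozenset({"flood"}), keep_all_but_forecast, 2):
    print(sorted(step))
```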

Why is this radically different from the current uses of a computer? Of course, humans are always involved in these action-perception cycles. So the primary difference is in the architecture of the computer’s data encoding and repository for production rules. The tri-level architecture matches the biological facts, as understood by many leading cognitive scientists, and separates the invariance of structure from the anticipation of function in environmental context in real time.

From our study of natural science, we know that neural systems derive implicit structure and encode structural representations of this structure into physical substrates of the brain (Schacter & Tulving, 1995). This means that the consequences of experience include modifications of metabolic processes, and these in turn may have direct consequences at the faster time scales where metabolic and quantum events are organized. We observe that the properties of emergence propagate from the faster time scales into slower time scales and then participate in the mental events themselves.

Through processes of adaptation, in the many levels of self-organization within the complex, the substrate expresses coherent and emergent neural (and electro-chemical) phenomena that establish a correspondence between the structural invariants of the world experienced and the internal mental events that represent this experience to the higher-order processing centers of the brain (Pribram, 1971; 1991; Levine et al, 1993; Prueitt, 1997).

These phenomena of emergence go to the issue of how knowledge can be extracted from a data source. We follow a logic-linguistic model that assumes that both knowledge acquisition and the object under investigation are complex. Knowledge and the natural world are complex.

In written text, the extraction of knowledge representation depends on finding correlations over time and developing a flexible mapping between internal representations and external objects and agents (Michalski, 1994). The computational system must have an "understanding" of how objects are composed from a substrate and how they function over time. This understanding can be, at least partially, "engineered" into software and hardware. But in doing so, the engineering should have some of the same properties of openness as do natural systems, and the overall system should accommodate control by the environment during the periods of emergence.

Section 5: Ecological processes

The ecological psychology community has developed a view that action-perception cycles are emergent from the physical properties of subsystems and are driven by a class of periodic forcing functions. The scholarly research in ecological psychology and ecological physics views periodic forcing as an essential part of the temporal stratification of biological organization into levels.

In this view, any one level of organization contains objects that are emergent constructions from a relatively stable substrate of basic elements. The periodicity of formation and collapse reflects simple local attempts to balance conservation laws.

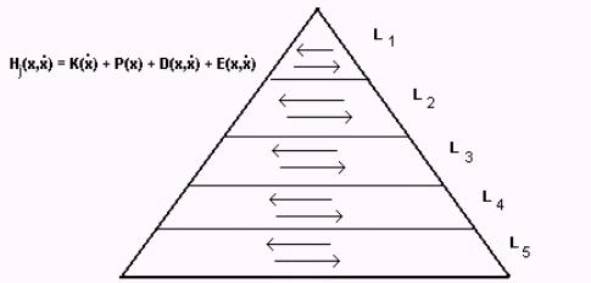

Figure 4: Natural systems stratify

It is clear from the literature that three critical issues must be resolved in any situational analysis of natural systems:

1) organizational stratification,

2) the formation of categories of occurrences within each level of organization, and

3) cross-scale entanglements involved in the emergence of events.

Categories of occurrences and the relationships they develop define the levels as shown in Figure 4. Each level, $L_i$, has the capability of forming a substrate from which new organizational wholes may emerge. Each of these levels, and their interaction, can be partially simulated by a thermodynamic model. The stratification can also be nested, and thus the Figure can be misleading.

Natural systems are "open" systems with varying kinds of openness. For example, enzymes produce microenvironments where constraints are placed on the "meaning" of assembled biochemical agents. In the hippocampus, microenvironments fuse the associational traces of implicit memory into compartmental invariances that are "judged" by the processes of other limbic systems and of cortical regions. These particular microenvironments are agile, semi-closed and flexible, and capable of responding to novelty and nonstationarity in the environment.

Stratified theory will allow science to organize experimental results in various areas of investigation. These areas include work on modeling social systems, biological systems, and environmental systems.

The stratified thermodynamic model of open systems (Figure 5) has microenvironments that emerge through a specific coherent aggregation of compatible processes. The average lifetime of processes at a certain level differs by many orders of magnitude from the average lifetime of the processes at the next level. For example, physical atoms form one level and chemicals form a different level.

Figure 5: Stratified thermodynamic model where one of the levels has collapsed.

The collapse of one of the microenvironments leads to the diffusion of material into an environment that supports the reassembly of this material, and other similar material, into new microenvironments. As these new environments arise, their structure and function reflect the characteristics of the material as well as the state of the environment. The persistence of function through many iterations of collapse and aggregation is maintained by autopoiesis (Maturana and Varela 1989).

Even now (2004) it is not clear how to engineer open systems, but a great deal of the work we have completed has not yet been applied to this problem. We expect to make significant progress because the foundational principles of anticipatory technology have addressed issues that are confused in the core concepts of semantic technology.

The study of open systems is extending engineering and closed-loop control theory into a new class of logics similar to quantum logic. These new logics reflect several classes of open-system properties. The permutation of systems into each other produces the features of assembly/disassembly processes and thus creates the feedback loops that reify the set of Orb constructions and the set of anticipatory templates.

The interface between a human user and a computational system will provide a "pragmatic" axis to situational analysis. Situational logics have formal means to describe how constituents are assembled into operational wholes and how operational wholes are disassembled into components. This means that formal tools for describing assembly, degeneracy and indeterminacy can be part of the computational logic as well as of the machine-human interface. The human cognitive ability to step away from the formalism and take a leap of faith is supported by a stream of high-quality factual information about event atoms and reminders of situational conditions.

Human experience of knowledge involves an internal awareness of the structure of time, and we humans do this well, but computational systems do not. The curriculum of the knowledge sciences must make it clear that pragmatism is rooted in real-time experience. This point returns us to the work by Robert Rosen on the nature of formal systems.

Rosen’s work is indeed difficult and must be studied for some time in order to see how his work on theoretical biology and category theory leads into an understanding of how the experience of knowledge might be formalized. So the K-12 curricular elements must develop a conceptual framework in which this type of difficult work can be addressed by at least some portion of the population.

A central issue facing general systems theory is whether or not a class of transformations can be characterized using methods more powerful than numerical models. Can our present notion of computation be extended in certain useful ways? In fact, Zabezhailo et al (1995) demonstrated to the author that a useful predictive theory of biochemistry is realizable. Zabezhailo used special logics that perform "iconic computations". These computations are carried out using the special inference operators of Quasi Axiomatic Theory (QAT) (Finn, 1991, 1995) in the context of Soviet work on biological and chemical warfare based on biochemistry.

A complete predictive theory of biochemistry lies in the future. Iconic structure might, for example, be induced using a process that is quite different from the one that constructs first the integers and then Hilbert mathematics (see Goldfarb, 1992, on inductive informatics).

Paths in "iconic" space could, of course, be analogous to trajectories in numerical state spaces; however, these iconic trajectories should be lawfully constrained by the information in a database containing the results of specific analyses of biochemical structure-activity relationships. Pospelov (1986; 1995) referred to these trajectories as syntagmatic chains.

Other scholars and logicians have worked on related issues. For example, the relationship between canalization in simplicial complexes (Johnson, 1996) and structural adacity (Burch, 1989) in artificial life systems provides a means for simulating some of the mechanisms that enfold the individual state transitions of computational agents in a complex artificial ecosystem. But it should be noted that much of the work in artificial life treats state transitions as if there were an experience of state, and our position is that there is no evidence that an experience is occurring.

The tri-level architecture is interesting to us because the substructural level is considered to be the atoms that, when aggregated together under the influence of templates, produce the emergent phenomenon in the form of compounds. Thus we became increasingly hopeful that a new generation of open systems engineering would be achieved with the tri-level architecture.

Section 6: Application of stratified theory to information production

One approach to knowledge representation and management assumes the existence of a table (database) where the system states, i.e. those states that a compartment can assume, are all specified and related to a database of subfeatures. We construct a system that is specified in a formal fashion and which is computable. In this case, a theory of the world is provided in the form of a computer program.
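A toy version of such a table makes the approach concrete. The minimal Python sketch below assumes that each system state of a compartment is specified by the set of subfeatures it requires, and reports which specified states are consistent with an observed set of subfeatures. The state names and subfeatures are invented for illustration. A closed table of this kind can only return states that were specified in advance.

```python
# Hypothetical state table: each compartment state is specified by required subfeatures.
STATE_TABLE = {
    "resting": frozenset({"low_activity"}),
    "alert": frozenset({"high_activity", "novel_input"}),
    "habituated": frozenset({"high_activity", "familiar_input"}),
}


def consistent_states(observed: set) -> list:
    """Return every specified state whose subfeatures are all present in the observation."""
    return sorted(name for name, needed in STATE_TABLE.items() if needed <= observed)


print(consistent_states({"high_activity", "novel_input"}))  # ['alert']
print(consistent_states({"unexpected_signal"}))             # []
```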

Within the compartmental boundary of this program, an underlying ontology can assume different system states, and thus one might capture how the meaning of terms may drift. The rules that govern the ontology allow a modification of the sense of the terms. This gives us hope that issues of interlingua and pragmatic type can be addressed within individual compartments.

However, the procedures for the formation and dissolution of these programs must allow for the limitations that formal systems have in general and that a specific program has in particular.

The system should allow for emergence and control of emergence from an environment. How is this accomplished?

Machine intelligence can have a layered architecture where data constructions in one layer are combined to produce flexible concepts using partially defined relationships. An inductive analysis of the situation is then possible, where a fusion of data occurs based on the accommodation of characterizations of the novelty of the situation with characterizations of the background knowledge.

Complex natural systems are open systems that (1) are embedded in a larger space, (2) are composed through an assembly process, (3) have behavioral properties with internal and external work cycles, and (4) can be described as variably stratified (Figure 7).

Figure 7: The Target of Investigation is observed a number of times

Because of these four properties, the Target of Investigation can be observed a number of times,

$T = \{O_1, O_2, \ldots, O_n\}$

· each "observation", $O$, of the target has a "bag" of properties $P = \{p_1, p_2, \ldots, p_s\}$. The cardinality of the bag of properties is finite (but open).

· each "observation", $O$, is composed from a "bag" of basic elements $A = \{a_1, a_2, \ldots, a_m\}$. The cardinality of the bag of elements is finite (but open).

· logical hypotheses (J.S. Mill) can be asked:

1. does an observation have (not have) property $p$?

2. for each basic element, $a_i$, is $a_i$ a cause of (or not a cause of) the property $p$?
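Question 2 can be approached, in its simplest classical form, with Mill's joint method of agreement and difference: an element is a candidate cause of $p$ if it is present in every observation that has $p$ and absent from every observation that lacks it. The minimal Python sketch below assumes each observation is given as a pair of sets (basic elements, properties). It is not the voting procedure or the QAT inference operators mentioned in this chapter, only the classical Millian method that those procedures are said to extend.

```python
def candidate_causes(observations: list, prop: str) -> set:
    """Joint method of agreement and difference for one property.

    observations: list of (elements, properties) pairs of sets.
    Returns the elements present in every observation that has `prop`
    and absent from every observation that lacks it.
    """
    positives = [els for els, props in observations if prop in props]
    negatives = [els for els, props in observations if prop not in props]
    if not positives:
        return set()
    agreement = set.intersection(*map(set, positives))  # method of agreement
    seen_without = set().union(*negatives)              # elements seen without prop
    return agreement - seen_without                     # method of difference


obs = [
    ({"a", "b", "c"}, {"p"}),
    ({"a", "c", "d"}, {"p"}),
    ({"b", "c"}, set()),
]
print(candidate_causes(obs, "p"))  # {'a'}
```

Element `c` is present in every positive observation but also in the negative one, so the method of difference rules it out.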

The observations produce the categories of atoms and event templates. This presupposes, of course, that the properties and the basic elements of the target of investigation can be described.

The quality of any automated reasoning system is a function of its power to reveal the basic signature of a situation under investigation (see Ritz and Huber, 1996). Producing signatures requires instrumentation, measurement, representation and encoding, as well as a number of other steps. However, a number of different methods may produce reasonably good results, and given reasonably good initial results it is possible to use additional methods to develop a reification process and consequently a high-quality ontology that represents the structural characteristics of the basic signature.

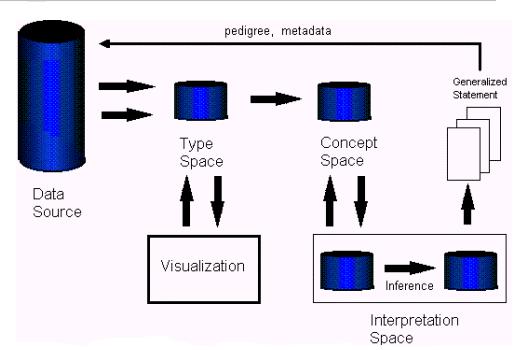

The block diagram in Figure 8 is open to architectural instantiations of computational systems that duplicate the structural mechanisms that experimental neuropsychology suggests are used by biological systems. Reification processes can be developed by following this diagram.

Figure 6: Block diagram for Situational Analysis

First, some sort of instrumentation is required to have a data source. Then the data source is attended to by a system using perceptual measurements of properties and features. These properties and features are represented, approximately, as elements of a set of primary components.

The separation of properties and features is vital in establishing correlations between the presence or absence of features and the properties of the event. The separation is thus taken to be a stratification where features are one level of organization and properties of the event are at a middle layer between the feature level and a level that is defined by environmental constraints.

One can misunderstand the notion of stratification in this context. The features are properly “atoms” whose identity has been altered by the aggregation of a set of atoms into an object. That object has properties, partially determined by the atoms that compose the object.

As a practical matter, the full understanding of stratification, as exemplified in quantum mechanics or in linguistic double articulation (Lyons, 1968), is not necessary in order to produce value from the block diagram.

In fully stratified theory, the atoms are combined into a compound which has properties that are partially established by environmental constraints AND by the process in which the compound comes into a separate existence as a compound.

Stratification is essentially already present in many machine-understanding techniques. It is seen in the separation of token occurrence and text unit in latent semantic indexing (Dumais & Landauer, 1992). Latent semantic analysis measures the distributed co-occurrence of elements within units. Other categorization and feature extraction techniques exist in great numbers. For example, a simple theme vector transformation of a document's content produces a representation (Rijsbergen, 1979; Prueitt, 1996b) of the linguistic variation in text. The variation can also be captured using statistical models such as the hidden Markov model. In each of these techniques, some element of stratified theory can be seen.
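The separation of token occurrence from text unit can be illustrated with a small Python sketch that counts how often pairs of tokens co-occur within the same unit. This is only the counting step; latent semantic analysis would go on to factor a term-by-unit matrix (typically with a singular value decomposition), which is omitted here. The units and the whitespace tokenizer are illustrative assumptions.

```python
from collections import Counter
from itertools import combinations


def cooccurrence(units: list) -> Counter:
    """Count unordered token pairs that appear together in the same unit.

    Tokens form one level (occurrences); units form another (contexts).
    Each pair is counted at most once per unit; pairs are stored in sorted order.
    """
    pairs = Counter()
    for unit in units:
        tokens = sorted(set(unit.lower().split()))
        pairs.update(combinations(tokens, 2))
    return pairs


units = [
    "river flood warning",
    "flood warning issued",
    "river levee repaired",
]
counts = cooccurrence(units)
print(counts[("flood", "warning")], counts[("flood", "river")], counts[("levee", "warning")])  # 2 1 0
```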

The variation itself can be examined directly using the category theory developed by Prueitt in 2001 and 2002. This work is called categoricalAbstraction (cA) and eventChemistry (eC). Because of the importance of the work completed prior to 2001, this work will only be treated lightly in this book. We anticipate a follow-up book on event chemistry. The point is that linguistic variation itself is a phenomenon to be observed by any method that reveals its character. Once linguistic structure is observed and reified, an event knowledge base can be constructed and one can apply the tri-level architecture directly to predictive analysis of the event structure pointed to by the linguistic variation. One need not use cA and eC methods.

Theme vector representation may also have some correspondence to how the brain processes information. The Gabor representation and transform may play a role in the human cortex (see Prueitt, 1997 for a literature review). The type space is then projected into a system for visualization. In the case of human perception, this projection is a reentrant bi-projection between the lateral geniculate nucleus and the layered visual cortex. But we have to be careful here, because Hilbert space mathematics does not handle the phenomena of measurement and emergence as well as one might like.

One indication that the Hilbert formalism is limited is how difficult machine intelligence has proven to be. Very few software systems have visualization of theme (feature) space as well as an integrated representation of knowledge in the form of a concept database. Concept databases exist in a few cases (Abecker, et al, 1997), but are primarily restricted to mechanical systems or industrial plants. Software packages such as Spires, developed by Pacific Northwest National Laboratory, make projections into feature spaces based on cluster analysis. But a process of reification and ontology building has not, as yet, led to demonstrations of event knowledge bases having the agility and novelty detection that we predict from the Orb systems.

Our scientific challenge is to see both computational visualization and human perception within the same paradigm. This may not be so difficult. A large amount of experimental research exists, and some of it has been integrated into our explanatory framework. With stratified theory we see new computational projection schemes based on situational semantics. The object of visualization might be related not only to a themespace but also to a concept space. And the visualization can be controlled within the tri-level architecture so that, as the visualization occurs, the environment is allowed to make small perturbations in the event representation.

The study of the framework suggests that the human brain achieves the formation of concepts through a distributed disassembly and reassembly of representational features (Figure 7). This framework is illustrative of a general systems property regarding the emergence of operational wholes within ecosystems. It is also suggested that the human in vivo concept space is a virtual space, in the sense that the space never actually exists in total in any specific circumstance. Parts of this virtual space come into being while other parts are blocked by various types of competitive-cooperative network dynamics. Of course, this simple architecture disguises the complexity of how the brain uses both its neural architecture and its chemical composition.

Figure 7: The niches in an ecosystem share in the common use of a finite class of natural types.

Machine intelligence based on stratification might be simpler than human intelligence and yet share behavioral features. For example, a projection from a complete enumeration of a knowledge-engineering-type concept space can be made onto a concept subspace. In the block diagram this is called an interpretation space. This subspace may be mirrored by the activation of components of a situational model supporting automated reasoning. The mirrors can be maintained by a neural network associative memory, as demonstrated in any number of neural network architectures.
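One classical example of a neural network associative memory is the Hopfield network, which stores patterns in a Hebbian weight matrix and recalls a stored pattern from a partial or noisy cue. The minimal Python sketch below uses it to stand in for one direction of the mirror between a concept subspace and a situational model. The choice of a Hopfield network, the bipolar (+1/-1) encoding and the function names are our assumptions for illustration, not the specific architecture used by the authors.

```python
def train(patterns: list) -> list:
    """Hebbian rule: w[i][j] = sum of p[i] * p[j] over stored patterns, zero diagonal."""
    n = len(patterns[0])
    w = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j]
    return w


def recall(w: list, cue: list, max_sweeps: int = 10) -> list:
    """Update units one at a time until a full sweep changes nothing."""
    state = list(cue)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(state)):
            field = sum(w[i][j] * state[j] for j in range(len(state)))
            if field != 0:  # a zero field leaves the unit unchanged
                new = 1 if field > 0 else -1
                if new != state[i]:
                    state[i] = new
                    changed = True
        if not changed:
            break
    return state


# Two stored "concept" patterns in bipolar form; they are orthogonal.
concept_a = [1, 1, 1, 1, -1, -1, -1, -1]
concept_b = [1, -1, 1, -1, 1, -1, 1, -1]
weights = train([concept_a, concept_b])

noisy_a = [-1] + concept_a[1:]  # first unit flipped
print(recall(weights, noisy_a) == concept_a)  # True
```

The sketch models recall from a cue only; the mapping back to a concept subspace after an inference step is not modeled here.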

The mirrors are two-way, and as a consequence a separate mapping back to a concept subspace is made after an inference engine has changed the state space of the situational model. This notion of projections and mirrors between representational spaces is a reasonable first model for the production of computer-based situational models. Before 2002, a number of scientific issues were not yet resolved. The structural form of a computer-based concept space had not been worked out. Simple themespaces had been defined, but a new class of objects was needed in themespaces. We expect these objects to create topological distortions of the otherwise flat Euclidean space, and to instantiate a theory of structural operators defined in coincidence with inference engines.

Section 7: Generalizing a model of human memory and anticipation

The voting procedure reflects a correspondence between human perceptual processes (involving memory, experience and anticipation) and a proposed computational architecture for routing informational bits, knowledge artifacts, to and from locations. The voting procedure (Appendix) is the simplest form of the Russian extension of Mill’s and Peircean logic. It has a data structure and a process model that operationalize the correspondence between the stratified processes involved in human memory, the experience of mental awareness, and human anticipation.

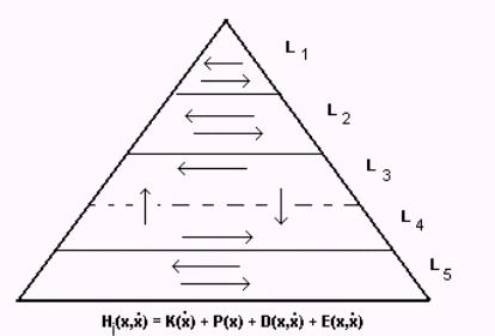

From this correspondence, it is possible to build a model of human memory. This model is a computational model consistent with von Neumann computing, but is organized into three separate levels. In the model, we stipulate a set of mechanisms that create a decomposition of experience into minimal invariances thought to be stored in different regions of the brain (Schacter & Tulving, 1993). The evidence for these models of natural systems is grounded in experimental science, whereas the computational model is grounded in computer science. The use of the model requires a human in the loop to reify the event structure.

The physical process that brings the experience of the past to the present moment involves three stages.

1) First, measured states of the world are parceled into substructural categories.

2) An accommodation process organizes substructural categories as a by-product of learning.

3) Finally, the substructural elements are evoked by the properties of a real-time stimulus to produce an emergent composition in which memory is mixed with anticipation.
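The three stages can be sketched as three small functions. In this minimal Python sketch we assume, for illustration only, that measurements are numbers parceled into categories by fixed bins (stage 1), that accommodation records which categories co-occur across learning episodes (stage 2), and that evocation returns the stimulus categories together with remembered categories linked to them (stage 3). The bin edges, the names and the counting rule are all hypothetical; the model in the text is not specified at this level of detail.

```python
from bisect import bisect_right
from collections import defaultdict

BIN_EDGES = [10.0, 20.0, 30.0]  # hypothetical category boundaries


def parcel(measurements: dict) -> frozenset:
    """Stage 1: map each named measurement to a (name, bin index) category."""
    return frozenset((name, bisect_right(BIN_EDGES, value))
                     for name, value in measurements.items())


def accommodate(memory: dict, episode: frozenset) -> None:
    """Stage 2: strengthen links between categories that occur together."""
    for a in episode:
        for b in episode:
            if a != b:
                memory[a][b] += 1


def evoke(memory: dict, stimulus: frozenset, threshold: int = 1) -> frozenset:
    """Stage 3: the stimulus categories plus remembered categories linked to them."""
    recalled = {b for a in stimulus
                for b, strength in memory.get(a, {}).items() if strength >= threshold}
    return stimulus | recalled


memory = defaultdict(lambda: defaultdict(int))
accommodate(memory, parcel({"temp": 25.0, "noise": 5.0}))
accommodate(memory, parcel({"temp": 26.0, "noise": 4.0}))
print(sorted(evoke(memory, parcel({"temp": 27.0}))))  # [('noise', 0), ('temp', 2)]
```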

Each of these three processes involves the emergence of attractor points in physically distinct organizational strata. How does emergence come to exist, and what material substance is combined together during the emergence process? How does the ecological affordance of the environment come to constrain this aggregation process? These questions have been treated in general within various scientific disciplines; however, we treat the issue of emergence in a semiotic fashion, with an eye on how sign systems assist in human communication and comprehension.

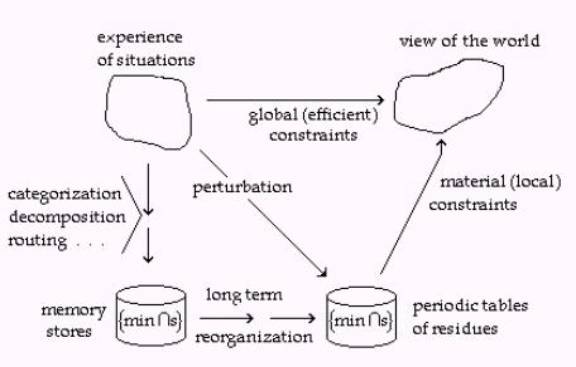

Figure 7: The process flow that we take as an accurate model of human memory formation, storage and use.

The Voting Procedure considers questions about the "entailment" (entailment is a generalization of all classes of causes: formal (logical), material, efficient and final) of natural symbol systems. A symbol grounding provides a detailed reference to distributions of potential meaning. Obtaining the specification of these distributions is a matter of observation using an information aggregation process. Set theory is then used to support inference.

A class of minimal invariants (called minimal intersections) is constructed from the representational elements produced through measurement processes. These invariants are then used as the logical atoms from which axiomatic situational logics are produced.
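The construction of minimal intersections can be sketched as follows. In this minimal Python sketch, which is our assumption about one simple way to realize the idea rather than the procedure given in the Appendix, each observation is represented by its set of measured elements. We collect every non-empty intersection of two or more descriptions (the invariants), then keep those invariants that contain no smaller non-empty invariant.

```python
from itertools import combinations


def invariants(descriptions: list) -> set:
    """Non-empty intersections of every group of two or more descriptions.

    Enumerates all groups, so the cost is exponential in the number of
    descriptions; acceptable for a small illustration only.
    """
    sets = [frozenset(d) for d in descriptions]
    found = set()
    for r in range(2, len(sets) + 1):
        for group in combinations(sets, r):
            common = frozenset.intersection(*group)
            if common:
                found.add(common)
    return found


def minimal_intersections(descriptions: list) -> set:
    """Invariants that contain no smaller non-empty invariant."""
    inv = invariants(descriptions)
    return {s for s in inv if not any(t < s for t in inv)}


descs = [{"a", "b", "c"}, {"a", "b", "d"}, {"a", "e"}, {"c", "f"}]
print(sorted(sorted(s) for s in minimal_intersections(descs)))  # [['a'], ['c']]
```

Here `{a, b}` is an invariant (shared by the first two descriptions) but is not minimal, because the smaller invariant `{a}` lies inside it.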

The investigation of entailment must begin with the identification of most of the basic elements that are involved in causing the situations under investigation. The process is called "descriptive enumeration". Complete and consistent descriptive enumeration requires the extraction of minimal elements to ground a system of signs pointing to the causes of a situation.

For example, if we are considering chemical reactions and properties of chemicals in complex situations, then these minimal elements are the signs of either the atoms themselves or larger groups of compounds that occur as invariants across multiple situations.

In a similar fashion, a situational logic can be constructed that relates the presence or absence of these minimal elements to the properties of situations (Mill, 1843). The situational logic is completed when plausible and reliable truth evaluations are defined on a set of well-formed formulas having logic atoms corresponding to those non-empty minimal intersections of causal elements found through a discovery process.
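Evaluating a situation against such a logic can be sketched as follows. In this minimal Python sketch, a positive hypothesis is an atom (a set of elements) taken to cause a property and a negative hypothesis is an atom taken to block it; a hypothesis fires when all of its elements are present. A situation then receives one of four evaluations: positive, negative, contradictory, or undetermined. The four-way outcome, the names and the firing rule are our assumptions for illustration. Note how a partial list of elements can leave a property undetermined.

```python
def evaluate(present: set, positive: list, negative: list) -> str:
    """Evaluate one property for a situation, given the elements present.

    A hypothesis (a set of elements) fires when all of its elements are present.
    """
    pos = any(h <= present for h in positive)
    neg = any(h <= present for h in negative)
    if pos and neg:
        return "contradictory"
    if pos:
        return "positive"
    if neg:
        return "negative"
    return "undetermined"


positive = [frozenset({"a"})]
negative = [frozenset({"b", "d"})]
for situation in ({"a", "c"}, {"b", "d"}, {"a", "b", "d"}, {"c"}):
    print(sorted(situation), evaluate(situation, positive, negative))
```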

So there are three steps:

1. the identification of a set of minimal elements that could serve as causes of properties,

2. the development of a situational logic that predicts the properties of situations given a partial or complete list of those minimal elements that are present in the situation, and

3. the maintenance of a second-order system for changing the intermediate language to accommodate new information.

The first two of these steps are addressed using a version of tri-level logical argumentation, relating structure to function. This argumentation can be adapted to developing the computational memory required for automated text understanding and situational analysis. The third step requires a special interface to human experts.