
Chapter 12

Knowledge Technologies and the Asymmetric Threat

Section 1: Demand-side Knowledge Process Management

A new methodology allows the measurement of worldwide social discourse on a daily

basis. General systems properties and

social issues involved in the adoption of this methodology are outlined in this

paper. Technical issues related to

logic, mathematics and natural science are touched on briefly. A full treatment requires an extensive

background in mathematics, logic, computer theory and human factors.

Community

building and community transformation have always involved complex processes

that are instantiated from the interactions of humans in the form of social

discourse. Knowledge management models

of these processes involve components that are structured around lessons

learned and lessons encoded into long-term educational processes. As a precursor to our present circumstance,

for example, the Business Process Reengineering (BPR) methodologies provide for

AS-IS models and TO-BE frameworks. Over

the past several decades, various additional knowledge management disciplines

have been developed and taught within the many knowledge management

certification programs. However, human

knowledge management at the level of individual empowerment has not generally

been part of the BPR methodology or knowledge management certification

programs.


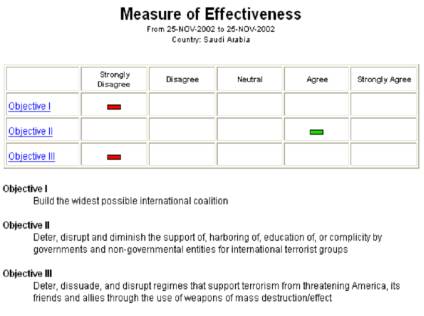

Figure 1: Experimental system producing polling-like output

The

complex interior of the individual human is largely unaccounted for in

measuring the thematic structure of social discourse. But the individual is where demand for social reality has its

primary origin. So something has been missing, and it is missing from information technology: the available science on social expression and individual experience. That individual variation in response patterns, for example, is not being accommodated by information technology is due to many factors. Some of these are

technical issues related to limitations in design agility, and some of these

are related to the nature of formal systems themselves.

So

in light of these limitations, we have to be reminded that human tacit

knowledge expression occurs in human dialog, even if the fidelity of this

expression is sometimes low and sometimes high. Natural language is NOT a formal system. Yes, abstraction is used in spoken language;

but a reliance on pointing and non-verbal expression helps to bring the

interpretation of meaning into a pragmatic axis that exists within the

discussion, as it occurs and is experienced.

Written language then extends a capability to point at the non-abstract,

using language signs, to what is NOT said but is experienced. Human social interaction has evolved to

support the level of understanding that is needed for living humans, within

culture, to form social constructs. But

computer based information systems have so far failed to represent human tacit

knowledge, even though computer networks now support billions of individual

human communicative acts, per day, via e-mail and collaborative

environments.

There

is a mismatch between human social interaction and computers. How is the mismatch to be understood? We suggest that the problem be understood in

the light of a specific form of complexity theory.

Computer

science is a subset of mathematics, and mathematics is expressed in formal systems. The near future holds an evolution of

mathematics that is similar to the weakening of category theory by the use of

rough sets, and the weakening of logic by quasi-axiomatic logic. These evolutions move in the direction of a

stratification of formal systems into complexity theory. A number of open questions face this

evolution, including the re-resolution of notions of non-finite, the notion of

an axiom, and the development of the understanding of human induction (seen as

a means to "step away from" the formal system and observe the real

world directly).

It

is via this weakening of constructs lying within the foundations of logic and

mathematics that an extension of the field of mathematics opens the way to a

new computer science. But as this happens,

we must always guard against allowing computer science to make claims about

such things as "formal semantics" and "machine

awareness". Why? Because computer science is based on

categorical abstractions, and confusing these abstractions with objects that

exist in physical reality leads to error.

Computer science has a proper place, and should remain in its place and

not compete as if it were a natural science.

Side note on the AI failure

The “artificial intelligence” failure can be, and often is, viewed as arising simply because humans have not yet understood how to develop the right types of computer programs. This is an important viewpoint that has led to interesting work on computer representation of human and social knowledge.

However, we take the viewpoint that a representation of knowledge is an

abstraction and does not have the physical nature required as an “experience”

of knowledge. The fact that humans

experience knowledge so easily may lead us to expect that knowledge can be experienced

by an abstraction. And we may even

forget that the computer program, running on hardware, is doing what it is

doing based on a machine reproduction of abstract states. These machine states are Markovian, a mere

mathematical formalism. By this, we

mean that the states have no dependency on the past or the future; except as

specified in the abstractions that the state is an instantiation of.

There is no dependency on the laws of physics either, except as encoded

into other abstractions. This fact

separates computer science and natural science.

The tri-level architecture, developed by Prueitt in

the mid 1990s, attempts to model the relationship between memory of the past,

and awareness of the present, and the anticipation of the future. However, once this machine architecture is

in place, we still will be working with abstraction and not a physical

realization of (human) memory or anticipation.

Optical computing or quantum computing may change this, but these

“stratified” computational systems are not well understood as yet.

Stratification seems to matter, and may help on issues

of consistency and completeness, the Gödel issues in the formal foundations of

logic. Already connectionist models of

biological intelligence have realized some aspects of the tri-level

architecture. But the clean separation

of memory functions and anticipatory functions allows one to bring the

experimental neuroscience and the cognitive science into play. For example, memory and anticipatory systems

are being modeled as separately caused by interactions with a world, external

to the computer program. Awareness binds parts of these systems together in a

present moment, having a physical and pragmatic axis.

This world, external to the computer, is not an abstraction. The measurement of the physical world

results in abstraction; in the tri-level architecture the measurement of

invariance is used to produce a finite class of categorical Abstractions (cA).

These cA atoms have relationships that are expressed together in patterns and

these patterns are then expressed in correspondence to some aspects of the

measured events. The cA atoms are the

building blocks of events, or at least the abstract class that can be developed

by looking at many instances of events of various types.

Anticipation is then regarded as expressed in event chemistries and

these chemistries are encoded in a quite different type of abstraction similar

in nature to natural language grammar.
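
As a rough illustration of the measurement step described above, the following sketch treats recurring word pairs as stand-ins for cA atoms and describes each event by the atoms it contains. The function names, the use of word bigrams, and the `min_events` threshold are assumptions made for this example only; they are not taken from the tri-level architecture itself.

```python
from collections import Counter

def ngrams(text, n=2):
    """Return the set of word n-grams found in one text."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}

def extract_atoms(events, n=2, min_events=2):
    """Keep n-grams that recur in at least `min_events` events.

    Recurrence across events is used here as a crude proxy for invariance.
    """
    counts = Counter()
    for text in events:
        counts.update(ngrams(text, n))  # each event counts an n-gram once
    return {gram for gram, c in counts.items() if c >= min_events}

def describe(event, atoms, n=2):
    """Express an event as the sorted list of atoms it contains."""
    return sorted(ngrams(event, n) & atoms)

# Hypothetical event texts, invented for illustration.
events = [
    "fuel prices rise again",
    "protest over fuel prices",
    "fuel prices fall after protest",
]
atoms = extract_atoms(events)
profile = describe(events[1], atoms)
```

Here only the pair ("fuel", "prices") recurs across events, so it is the single atom and every event is described in terms of it. A realistic system would need a far richer notion of invariance than literal word repetition.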

We

argue that the development of categorical abstraction and the viewing of

abstract models of social events, called “event chemistry”, are essential to

the protection of national security.

Distributed community processes are supporting asymmetric threats to

American national security and to the security of other nation states.

There

is a national obligation to develop response mechanisms to these threats. The response must start with proper and clear

intelligence about the event structures that are being expressed in the

social world. Human sharing of tacit

knowledge must lie at the foundation of these response mechanisms. Maturity and principle must guide our use of

this foundation.

Understanding

the difference between computer-mediated knowledge exchanges and human

discourse in the “natural” setting is critically important to finding a measure

of security within a social world that has been changed by globalization. One of our challenges is due to advances in

warfare capabilities, including the existence of weapons of mass

destruction. Another obvious challenge

is due to the existence of the Internet and other forms of communication

systems. Economic globalization and the

distribution of goods and services presents yet another set of challenges that

delineate the present and the future from the past. If the world social system is to be healthy, it is necessary that

these security issues be managed.

Event

models, to be derived from the data mining of global social discourse, will

define a science that has deep roots in legal theory, category theory, logic,

and the natural sciences.

The

current national security requirements demand that this science be synthesized

quickly from the available scholarship.

Within this new science, stratified logics will compute event abstractions at one scale of observation and event atom abstractions at a second scale of observation. The atom abstractions are themselves derived from polling and data mining processes.

Note on privacy issues

We propose developing a stratification of information into two layers of analysis.

The first layer is the set of individual polling results or the

individual text placed into social discourse.

In real time, and as trended over time, categorical abstraction is

developed based on the repeated patterns within word structure. Polling methodology and machine learning

algorithms are used.

The second layer is a derived aggregation of patterns that are analyzed

to infer the behavior of social collectives and to represent the thematic

structure of opinions. Drilling down

into the specific information about, or from, specific individuals will require

government analysts to make a conscious step and thus the very act of drilling

down from the abstract layer to the specific informational layer is an enforceable

legal barrier that stands in protection of Constitutional Rights.
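
The two-layer idea can be sketched in code. The example below is a minimal illustration, assuming a hypothetical `StratifiedStore` class: the aggregate layer exposes only word counts, while any drill-down to an individual record requires a stated justification and leaves an audit entry. The class, its method names, and the sample data are invented for this sketch and do not describe an existing system.

```python
from collections import Counter
from datetime import datetime, timezone

class StratifiedStore:
    """Hypothetical two-layer store: aggregate themes plus guarded records."""

    def __init__(self):
        self._records = {}    # layer 1: individual texts, keyed by record id
        self.audit_log = []   # every drill-down leaves an entry here

    def add(self, record_id, author, text):
        self._records[record_id] = {"author": author, "text": text}

    def themes(self, top=3):
        """Layer 2: word counts across all records, with no author data."""
        counts = Counter()
        for rec in self._records.values():
            counts.update(rec["text"].lower().split())
        return counts.most_common(top)

    def drill_down(self, record_id, analyst, justification):
        """Layer 1 access: refused without a justification, always logged."""
        if not justification:
            raise PermissionError("drill-down requires a stated justification")
        self.audit_log.append({
            "analyst": analyst,
            "record_id": record_id,
            "justification": justification,
            "time": datetime.now(timezone.utc).isoformat(),
        })
        return self._records[record_id]

store = StratifiedStore()
store.add(1, "alice", "water shortage in the north")
store.add(2, "bob", "water prices rising")
top_theme = store.themes(top=1)
record = store.drill_down(1, analyst="analyst-7", justification="case 12")
```

The design choice worth noting is that the aggregate view never returns author information, so the only path to an individual is the logged `drill_down` call. Whether such a log would satisfy a legal standard is outside the scope of the sketch.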

Given

the recognition of human privacy rights, what will bring human tacit experience

more easily into distributed community processes? This issue of tacit knowledge is a core challenge to knowledge

management methodology and technology.

But for this paper, the context is national security.

What

science/technology is needed to “see” the private knowledge of events that lead

to or support terrorism? Perhaps, we

need something that stands in for natural language? Linguistic theory tells us that language use is not reducible to

the algorithms expressed in computer science.

But if “computers” are to be a mediator of social discourse, must not

the type of knowledge representation be more structured than human

language? What can we do?

The

answer to the question of degree of structure is the most critical inquiry that

technologists and scientists must develop a consensus about. According to our viewpoint, the computer

does not have tacit knowledge to disambiguate natural language, in spite of

several decades of effort to create knowledge technologies that have “common sense”. Based on principled argument grounded in

quantum neurodynamics, a community of natural scientists has argued that the

computer will not have tacit knowledge because tacit knowledge is something

experienced by humans. Our claim is

that there is no known way to fully and completely encode tacit knowledge into

rigidly structured standard relational databases. A large body of experimental

work in the natural sciences suggests that computers alone, no matter the

funding level, cannot do this.

A workaround for this quandary is suggested in terms of a Differential Ontology

Framework (DOF) that has an open loop architecture showing critical dependency

on human sensory and cognitive acuity.

2: Differential Ontology Framework (technical section)

Computers

are not now, and likely will never be cognitive in the same way as humans

are. However, the notion that

cognitive-like processes might be developed has brought some interesting

results. At the core of the solutions

that have been, or are likely soon to be, made available is a class of

machine-encoded dictionaries, taxonomies and what are often called

ontologies. While encoded dictionaries,

taxonomies and ontologies are often referred to as knowledge representation,

one has to be careful to point out that knowledge is “experienced” and that

these computer data structures are experienced only when observed by a

human. Some experts in the field

express the notion that computers do or will experience, and this is the

so-called strong AI position.

The

rejection of strong AI opens up an understanding that knowledge definition,

experience and propagation require a greater degree of agility and

understanding by users of the processes that have been developed by the strong

AI community over the past 50 years.

There has been a failure to communicate.

The

strong AI community, on the other hand, should eventually come to support the

principled arguments made in the quantum neuroscience community. Quantum neuroscience has an extensive

literature that reports on issues related to human memory, awareness and

anticipation. This literature is

referenced in a separate technology volume, simply because the purpose of this

paper is to develop concepts, not to provide what should be a complete citation

of the literature (as opposed to a very partial citation that might be

misleading). However, we point out that

the quantum neuroscience literature addresses the question of how things happen

and in doing so this literature challenges classical Newtonian physics as a

model of natural complexity.

The cause of events has a

demand and a supply component.

Demand-side knowledge process management can be used to create

machine-encoded ontologies. These

formal constructs, that are the states of computer programs, are constructions

that exist in two forms, implicit and explicit. Implicit constructs are defined as proper continuum

mathematics. The constructs of

continuum mathematics are represented on the computer in a discrete form. The discrete form of implicit ontology has

the benefit of the precision of the embedded formal continuum mathematics, seen

in mathematical topology and mathematical analysis. Demand is a holonomic (i.e., distributed) constraint, like

gravity and so a distributed representation is needed. The continuum mathematics provides the

distributed representation that the computer “cannot” provide by itself.

An explicit ontology, on the

other hand, is in the form of a dictionary or perhaps a graph structure. The constructs of explicit ontology are

expressed as discrete mathematics, graph theory, number theory, and predicate

logics. The interface between discrete

mathematics and continuum mathematics has never been easy, so one should not

expect that the relationship between implicit and explicit ontology be easy

either. However, a formal theory of

by-pass has been developed, by Prueitt, which shows a relationship between

number base conversions, data schema conversions, and quasi-axiomatic theory

[1].

First-order predicate logics

are often developed over the set of tokens in some of the explicit ontology

that exists, for example by using a standard resource description framework

(RDF). Value is derived, and yet these

ontologies with their logics suffer from the limitations of explicit enumeration

and relational logics. This limitation

is expressed in the Gödel theorems on completeness and consistency in formal

logics [2] as well as in other literatures.

By-pass theory is designed to manage this problem.

The bottom line is that high

quality knowledge experience and propagation within communities needs the human

to make certain types of judgments. But

the methodology for human interaction with these structures is largely missing,

and is certainly not known by most of those who need now to use these

structures for national intelligence vetting.

We introduced the term

“Differential Ontology” to talk about a human mediated process of acquiring

implicit ontology from the analysis of data.

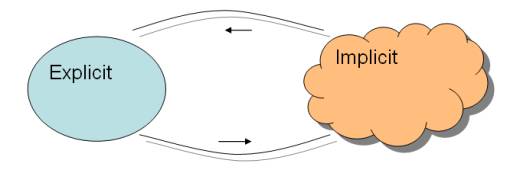

Figure 2: Differential Ontology Framework

By the expression “Differential

Ontology” we choose to mean the interchange of structural information

between Implicit (machine-based) Ontology and Explicit (machine-based)

Ontology.

• By Implicit Ontology we mean an attractor neural network system or one of the variations of latent semantic indexing. These are constructs of continuum mathematics and have an infinite storage capability.

• By Explicit Ontology we mean a bag of ordered triples { < a, r, b > }, where a and b are locations and r is a relational type, organized into a graph structure, and perhaps accompanied by first-order predicate logic.
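
To make the explicit form concrete, here is a minimal sketch of a bag of < a, r, b > triples indexed as a graph. The node names, the relation names, and the helper functions `build_graph` and `neighbors` are hypothetical and chosen only for illustration.

```python
from collections import defaultdict

# Hypothetical triples <a, r, b>; the node and relation names are invented.
triples = [
    ("report", "mentions", "port"),
    ("report", "mentions", "shipment"),
    ("shipment", "arrives_at", "port"),
    ("report", "mentions", "port"),   # a bag may contain duplicates
]

def build_graph(triples):
    """Index the bag as adjacency lists: a -> [(r, b), ...]."""
    graph = defaultdict(list)
    for a, r, b in triples:
        graph[a].append((r, b))
    return graph

def neighbors(graph, a, r=None):
    """Locations reachable from `a`, optionally filtered by relation type."""
    return [b for rel, b in graph.get(a, []) if r is None or rel == r]

graph = build_graph(triples)
mentioned = neighbors(graph, "report", "mentions")
```

Because the structure is a bag rather than a set, the repeated triple is kept, and `mentioned` lists "port" twice. A first-order predicate logic layer, if present, would sit on top of this index and is not shown.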

The differential ontology

framework uses implicit ontologies now found in stochastic and latent semantic

indexing “spaces” and derives a more structured form of dictionary type

ontology. The class of process

transformations between implicit and explicit forms of machine ontology is to

be found in various places. For

example, we have seen market anticipation in the large number of automated

taxonomy products that are appearing in the marketplace. However, we claim that the notion of

deriving explicit ontology from implicit ontology is an original

contribution.
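
The direction of this transformation, from a distributed representation to a discrete one, can be illustrated with a toy example. The sketch below is an assumption-laden simplification: raw term-by-document count vectors stand in for a latent semantic indexing space (no dimensionality reduction is performed), and term pairs whose vectors nearly align are emitted as triples. The `related_to` relation, the 0.9 threshold, and the sample documents are all invented for the example.

```python
import math
from itertools import combinations

def term_vectors(docs):
    """Map each term to its vector of per-document counts."""
    vectors = {}
    for i, doc in enumerate(docs):
        for word in doc.lower().split():
            vectors.setdefault(word, [0] * len(docs))[i] += 1
    return vectors

def cosine(u, v):
    """Cosine similarity of two equal-length count vectors."""
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    return dot / norm if norm else 0.0

def derive_triples(docs, threshold=0.9):
    """Emit <a, related_to, b> for term pairs whose vectors nearly align."""
    vectors = term_vectors(docs)
    triples = []
    for a, b in combinations(sorted(vectors), 2):
        if cosine(vectors[a], vectors[b]) >= threshold:
            triples.append((a, "related_to", b))
    return triples

# Hypothetical documents, invented for illustration.
docs = [
    "border crossing delay",
    "border crossing closed",
    "harvest delay",
]
explicit = derive_triples(docs)
```

In this toy corpus only "border" and "crossing" occur in exactly the same documents, so a single triple results. In the framework described here, a human would still judge whether such a derived relation is meaningful; the threshold alone does not settle that.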

Within the explicit ontology

there are localized topics. These

localized topic representations “sit” by themselves. In the implicit ontology

the information is distributed like gravitational wells are in physical

space. Move anything and everything

changes; sometimes only minutely and sometimes catastrophically. The perturbations of representation are then

formally seen as an example of a deterministically chaotic system. Natural systems may or may not be

deterministic, and thus the argument is that formal chaos is not the same as

the process seen in the emergence of natural systems in the setting of real

physical systems. Neural network type

attractor manifolds are an early form of the implicit ontology. But now we also have genetic algorithms, the

generalization of genetic algorithms in the form of evolutionary programming

and other mathematical constructs. The

tuning of these systems to the physical reality is thus of major

consideration. This tuning is not a

done-once, for-all-time task. As the

world changes, the tuning of implicit ontology must change also. How is this to be done?

For moving between these implicit and explicit forms of machine ontology, “state gesture mechanisms” can be attached to topic constructs, whether distributed or localized, using a new type of “stratified” architecture [4-6] that is grounded

in cognitive neuroscience, specifically in the experimental work on memory and

selective attention [7,8]. The

stratified architecture has multiple levels of organization, expressed as an

implicit or explicit, continuum or finite, state machine, in which within each

level certain rules and processes are defined.

Cross level interaction

often MUST involve non-algorithmic [9] movement within the state spaces. Thus a human, who CAN perform

non-algorithmic inference, is necessary if the overall differential ontology

system is to stay in tune with the external complex world. This means that implicit and explicit

formalism is NOT sufficient without real time human involvement. A state gesture mechanism drives the

information around that part of the system that lives in the computer, but the

demand for this supply comes from within private personal introspection,

perception and decision-making by humans.

Again, our viewpoint seeks to return responsibility, for control actions

in the world, to the human and push away the notion that the crisp and precise

states within computer information systems can reasonably be managed outside of

this responsibility.

State

gesture mechanisms allow the machine ontologies to be “assistant-to” human

decision making. The mechanism is not a

closed formalism or an information technology, but rather an intellectual

framework with stratified theory and cognitive neuroscience as the framework’s

grounding [10].

Following the architecture and design of a related applied semiotics theory developed by

Pospelov and his colleagues [11], the state gesture mechanism itself is deemed

largely subjective. This means that the

mechanism is not reducible to algorithms.

A ‘second order’ cybernetic system is required that is primarily

controlled by direct human intervention.

So the machine ontologies are required to be subservient to a human

knowledge experience process. The

details of the machine ontologies are visible as reminders or signed informants

within a sign system or natural language.

It is for this reason that one can call this a knowledge operating

system.

Elements of

micro-ontologies, that are formative within the moment, act as signs about the

knowledge processes that are regularly occurring in social networks. The differential ontology framework requires

a human interaction with these signs.

That is the Demand side.

Ontologies are streamed using formatted micro-ontologies from point to

point in the knowledge ecosystem resident to the community of practice. This is the Supply side.

A computational mirror of

the state gesture mechanism is also “assistant-to” the location where

information is moved within the enterprise.

So the mechanism acts as an automated reporting and assessment

technology. This assistance has great

value to social and economic processes.

Productivity goes up. Social

value goes up. The fidelity of

knowledge representation goes up.

Return on investment goes up.

Responsibility can, and

should, be assigned exactly where responsibility is situated. This is true, in particular, where

Constitutional rights to privacy are involved.

A knowledge operating system, such as the Knowledge Sharing Foundation,

can provide complete transparency to all instances where a human examines

private knowledge.

As an example, an evocative

question can be answered in various ways.

The answers made are part of a natural language generation capability

that involves the emergence of word structure from a mental experience. This emergence must involve a human

awareness, and the action of requesting the computer supporting processes can

be a trigger to reporting mechanisms about the inspection of private

information. Thus the barrier to

inspection is transparent.

The structure of

differential ontology mediated communication allows a mechanical roll-up of the

information into one of several technologies for natural language generation

from semi-structured information.

However, the process is formally underdetermined, and some constraints

must be imposed during the construction of the natural language response. One sees the same situation in the

mathematics of the wave functions in quantum mechanics. The formal mechanism for “collapsing the

wave” and realizing locality cannot be derived from the classical notions, and

perhaps cannot be derived at all in a single formal system (having both

completeness and consistency). Many

post-modern scholars of physics have equated this problem with the notion of

finding a single unified theory of everything [14].

Differential

micro-ontologies represent the first of a new generation of more complete

(supply side plus demand side) knowledge process management paradigms. Machine based ontologies generate natural

language that communicates to users in a way that is familiar. This drives knowledge sharing in a new

way.

What is surprising about

this vision is that it is NOT the popular Artificial Intelligence vision of the

future. The “real” future brings the

human more fully into contact with other humans within what is essentially a

Many to Many (M2M) communication device.

3: Many to many communication

The television industry is a

one to many communications device. One

community, having some diversity within the group, uses the television to

express that group’s views of the world.

There is a well-defined community boundary, having a complex interior

and community membership. The community

is diverse but nevertheless, the group represents one type of person. There is something in common within the

group. The commonality is not something

that is always recognized. It is organically evolved as a general system

property rather than by the explicit intent of those who choose to be members

of this community. Communities have “autopoietic”

structural coupling, in the sense defined by Maturana and Varela [13],

reinforced by the economic system and by the limitations of the television

itself. Specific structural coupling

has formed, again organically, due to the presentation aspect of television

where one group develops media and then markets this product in a

marketplace.

The television is not the

only one to many communication device.

Books and radio industries also have specific structural coupling that

reinforces a presentation of viewpoint within a marketplace. One might think that writing a book is

something that anyone can do, and if the book is a good book then other people

will read the book. However, this

common perception does not account for the barriers to entry that the book

industry has organically evolved. Other

one to many communication is under attack from many quarters and for many

reasons. Command and control

institutions (such as the Cold War type military organizations, and media

outlet industries) have a natural resistance to these attacks. However, these institutions must meet the

present challenge by renewal and by adoption of general systems thinking and

behavior.

America has a strong

multi-cultural identity, as well as a treasured political renewal mechanism

(e.g., the Presidential elections every four years). Thus the reinforcement of multi-cultural social theory seems the

likely consequence of the current challenges from fundamentalism as expressed

in reductionist science, and in religious and economic fundamentalism. Fundamentalism does not have a renewal

mechanism, as the history of religions shows.

Specific ignorance and specific mythology is held onto in spite of

contrary evidence. Deeper values

related to spiritual beliefs are more complex and are, likely, renewed within the

private sphere of individual self-image.

But social structures, churches and religious institutions, tend to not

renew.

Asymmetric threat comes from

non-government organizations.

Challenges emerge from these social systems because they

serve some purpose that the nation states do not serve. However, in the countries of the Third

World, the basic needs of individuals are often perverted by hunger, economic

injury and cultural insult [15].

Understanding the diverse opinions of non-governmental social systems is

thus the single most important response that the United States Administration

can make to the challenge of reducing the causes of terrorism.

It is natural that the

social origins of asymmetric threats will use new forms of many to many

communication in order to attack the vulnerabilities of a system where a large

number of social organizations has organically developed around the economic

value of one to many communications systems.

Developing agility and fidelity in defense information systems is the

strongest defense to these asymmetric threats.

This defense strategy applies to asymmetric information warfare, as well

as to the infrastructures that support mainstream command and control

systems.

In the 20th

century, many subsystems of the economic order have developed economic

structural coupling to organically developed one to many technology. The shift to many to many communications

tools is then essential, and yet inhibited.

This enigma must be sorted out.

The differential ontology

framework enables processes, which have one to many structural coupling, to

make a transition to a many to many technology. This is where the enigma is most fully seen. The asymmetric threat is using many to one

activity, loosely organized by the hijacking of Islam for private hatred and

grief. It is a new form of the “people

arise to overthrow the unjust government”.

The defense to this threat is the development of many to many

communication systems, and the related notion of categorical abstraction and

event chemistry.

The many to many

technologies allow relief from the stealth that many to one is given from the

perception of a fully developed and mature economically reinforced system

having one to many mechanisms. The

relief comes when machine mediation allows the formation of differential

ontology as a means to represent, in the abstract, the discussion being conducted by

organically self-identified social structures.

This representation is done via the development and algorithmic interaction

of human structured knowledge artifacts.

The evolution of user

structured knowledge artifacts in knowledge ecosystems must be validated by

community perception of that structure.

In this way the interests of communities are established through a

private to public vetting of perception.

Knowledge validation occurs as private tacit knowledge becomes

public. The relief from the asymmetric

threat evolves because a computer-mediated formation of a defense community

structure is facilitated and once this community structure exists in this form,

communication traffic analysis provides selective attention to most of the

threatening events from non-governmental entities. For example, a new social community cannot form outside of the

perceptional field of pre-existing communities that already have established

structural coupling within well-defined economic entities. National

surveillance systems have a way to see the threat.

The validation of artifacts

leads to structured community knowledge production processes and these

processes differentiate into the three levels of economic processes [16]. However, the validation process can be

addressed unwisely.

4: The role of Communities of Practice

Individual humans, small

coherent social units, and business ecosystems are all properly regarded as

complex systems embedded in other complex systems. Understanding how events unfold in this environment is not easy.

Schema independent data

representation is required to capture the salient information within implicit

ontologies. The class of latent semantic index techniques is one class of

examples of representation of information without a database schema; see the

topic map standard [11].

The current standards often ignore certain difficult aspects of the complex environment and attempt to:

1) Navigate between models and the perceived ideal system state, or

2) Construct models with an anticipation of process engineering and change management bridging the difference between the model and reality.

The new knowledge science

changes this dynamic by allowing individuals to add and subtract from a common

knowledge base composed of topic / question hierarchies supported within the

differential ontology framework. The software

enterprise is hidden from the user in two ways. First, a community process validates the formation of the core

knowledge base. This is a social

experience, not a technology. The core

knowledge base consists of reusable components which have the form of topic /

question pairs within a hierarchical ontology.

Second, the knowledge base is used via a simple viewer/controller that

works through web browsers.
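The knowledge base described above can be sketched as a small data structure. This is an illustration under stated assumptions, not the framework's actual implementation: the class names, the method names, and the rule that a topic / question pair becomes public once a fixed number of distinct members endorse it (the quorum) are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class TopicNode:
    """One topic in the hierarchy, with its questions and child topics."""
    topic: str
    questions: list = field(default_factory=list)
    children: dict = field(default_factory=dict)


class KnowledgeBase:
    """Topic / question pairs in a hierarchy, published by community endorsement."""

    def __init__(self, quorum=2):
        self.root = TopicNode("root")
        self.quorum = quorum   # assumed rule: endorsements needed to publish
        self.pending = {}      # (path, question) -> set of endorsing members

    def _node(self, path, create=False):
        """Walk the hierarchy along path, optionally creating missing topics."""
        node = self.root
        for name in path:
            if name not in node.children:
                if not create:
                    return None
                node.children[name] = TopicNode(name)
            node = node.children[name]
        return node

    def propose(self, member, path, question):
        """Record an endorsement; publish the pair once the quorum is reached."""
        key = (tuple(path), question)
        self.pending.setdefault(key, set()).add(member)
        if len(self.pending[key]) >= self.quorum:
            del self.pending[key]
            node = self._node(path, create=True)
            if question not in node.questions:
                node.questions.append(question)
            return True
        return False

    def remove(self, path, question):
        """Subtract a published pair from the common knowledge base."""
        node = self._node(path)
        if node is not None and question in node.questions:
            node.questions.remove(question)
            return True
        return False

    def questions(self, path):
        """Return the published questions under a topic path."""
        node = self._node(path)
        return list(node.questions) if node else []


# Hypothetical usage: two members endorse the same pair, so it becomes public.
kb = KnowledgeBase(quorum=2)
topic_path = ["security", "traffic analysis"]
kb.propose("alice", topic_path, "Which communities are forming?")
kb.propose("bob", topic_path, "Which communities are forming?")
```

The endorsement set stands in for the community process: the data structure records only that people agreed, and leaves the judgment itself to them.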

The technology becomes

transparent because information technology has matured, been

refined, and become a ubiquitous and stable commodity.

This presentation will close

as we address a specific conceptual knot and untie it by separating issues

related to natural language use.

Language and linguistics are relevant to our work for three reasons.

First, the new knowledge technologies are an extension to natural

spoken languages. The technology

reveals itself within a community as a new form of social communication.

Second, we are establishing knowledge ecosystems

using peer-to-peer ontology streaming.

Natural language and the ontologies serve a similar purpose. However, the ontologies are specialized

around virtual communities existing within an Internet culture. Thus ontology streaming represents an

extension of the phenomenon of naturally occurring language.

Third, the terminology used in various disciplines is often not

adequate for interdisciplinary discussion.

Thus we reach into certain schools of science, into economic theory and

into business practices to find bridges between these disciplines. This work on interdisciplinary terminology

is kept in the background, as many difficult challenges have

not yet been properly addressed. To assist in understanding this issue, general systems

theory is useful.

These issues are in a context. Within this context, we make a distinction

between computer computation, language systems, and human knowledge events. The

distinction opens the door to certain theories about the nature of human

thought. Through a body of theory one can ground a formal notation defining

data structures that store and allow the manipulation of topical taxonomies and

related resources existing within the knowledge base. Establishing the knowledge sciences will provide this body of theory.

In Summary: The differential ontology framework consists of

knowledge units and auxiliary resources used in report generation and trending

analysis. The new knowledge science

specifically recognizes that the human mind binds together topics of a

knowledge unit. The new knowledge

science holds that the computer cannot do this binding for us. The knowledge science reflects this

reality. The rules of how cognitive

binding occurs are not captured in the data structure of the knowledge unit,

as doing so is regarded as counter to the differential ontology framework. The human remains central to all knowledge

events, and the relationship that a human has with his or her environment is

taken into account. The individual

human matters, always.

References:

[1] Finn, Victor (1991). Plausible Inferences and

Reliable Reasoning. Journal of Soviet Mathematics, Plenum Publ. Corp.,

Vol. 56, N1, pp. 2201-2248.

[2] Nagel, E. and Newman, J. (1958). Gödel’s Proof.

New York University Press.

[3] Prueitt, P.S. (1995) A Theory of Process

Compartments in Biological and Ecological Systems. In the Proceedings of

IEEE Workshop on Architectures for Semiotic Modeling and Situation Analysis

in Large Complex Systems; August 27-29, Monterey, Ca, USA; Organizers: J.

Albus, A. Meystel, D. Pospelov, T. Reader

[4] Edelman, G. M. (1987). Neural Darwinism.

New York: Basic Books.

[5] Prueitt, Paul S. (1996c). Structural Activity

Relationship analysis with application to Artificial Life Systems, presented at

the QAT Teleconference, New Mexico State University and the Army Research

Office, December 13, 1996.

[6] Prueitt, P. (1998). An Interpretation of the

Logic of J. S. Mill, in IEEE Joint Conference on the Science and Technology

of Intelligent Systems, Sept. 1998.

[7] Levine, D. & Prueitt, P.S. (1989). Modeling Some Effects of Frontal Lobe

Damage - Novelty and Perseveration, Neural Networks, 2, 103-116.

[8] Levine, D.; Parks, R.; & Prueitt, P. S. (1993). Methodological and

Theoretical Issues in Neural Network Models of Frontal Cognitive Functions. International

Journal of Neuroscience, 72, 209-233.

[9] Prueitt, P. (1997b). Grounding Applied Semiotics

in Neuropsychology and Open Logic, in IEEE Systems Man and Cybernetics Oct.

1997.

[10] Penrose, Roger (1994). Shadows of the Mind, Oxford Press, London.

[11] Pospelov, D. (1986). Situational Control:

Theory and Practice. Published in Russian by Nauka, Moscow.

[12] Park, S; Hunting, S; and Engelbart S; (Editors)

(2002). XML Topic Maps: Creating and

Using Topic Maps for the Web. Addison Wesley

[13] Maturana and Varela (1989). The Tree of Knowledge.

[14] Nadeau, R, Kafatos, M; (1999). The Non-local Universe, the new physics

and matters of the mind. Oxford

University Press.

[15] Albright, M; Kohut, A

(2002). What the world thinks in 2002, The Pew Global Attitudes Project,

The Pew Research Center for the People & the Press.

[16] Prueitt, Paul S.;

(2002). “Transformation of Knowledge

Ecology to a Knowledge Economy”, KMPro Journal.